Appendix

8.1 SPDE and Triangulation

To fit LGM-type models with a spatial component, INLA uses the SPDE (Stochastic Partial Differential Equation) approach. Given a continuous Gaussian random process (a general continuous surface), SPDE approximates it by a discrete Gaussian process defined on a triangulation of the study region. Assume a set of points is available, located in some coordinate reference system (CRS), e.g. latitude and longitude or eastings and northings, and that the goal of the analysis is to estimate a spatially continuous process. Instead of estimating the process everywhere, it is estimated only at the vertices of the triangulation. This requires laying a triangulation of the study region over the process: the software predicts the value of the process at the vertices and then interpolates the remaining points, obtaining a “scattered” surface.

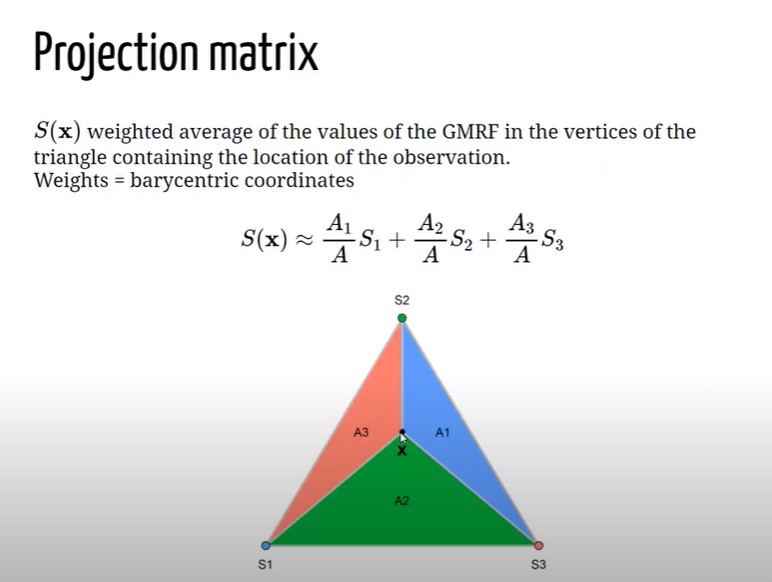

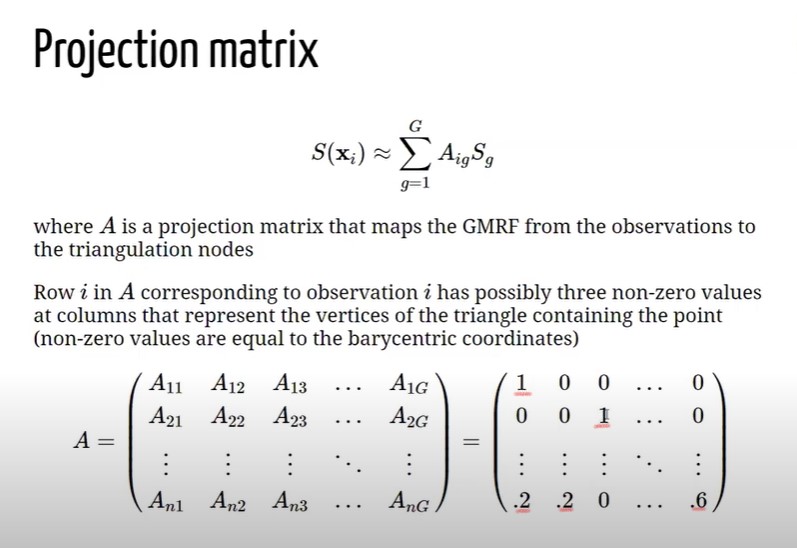

Imagine a location $x$ lying inside a triangle whose vertices carry the process values $S_1$, $S_2$ and $S_3$. SPDE sets the value of the process at $x$ equal to a weighted combination of the values at the vertices, where the weights are given by the barycentric coordinates (BC). Each barycentric coordinate is proportional to the area of the sub-triangle spanned by $x$ and the two other vertices. Let $T$ be the area of the whole triangle and $T_1$, $T_2$, $T_3$ the areas of the sub-triangles opposite to vertices 1, 2 and 3 respectively. Then the value at $x$ is:

$$S(x) \approx \frac{T_1}{T} S_1 + \frac{T_2}{T} S_2 + \frac{T_3}{T} S_3$$

This is the value of the process at location $x$ given the triangulation. SPDE is therefore approximating the process by a weighted average of its values at the triangle vertices, with weights proportional to the areas of the sub-triangles.

To do this within INLA a projection matrix $A$ is also needed, figure 8.4. The projection matrix maps the continuous GMRF (when a GP is assumed) from the observations to the triangulation. It essentially assigns to the process the height of the triangle at each vertex of the triangulation. $A$ has dimensions $n \times G$: it has $n$ rows and $G$ columns, where $n$ is the number of observations and $G$ is the number of vertices of the triangulation. Each row has at most three non-zero values (right matrix in figure 8.4), located in the columns of the vertices of the triangle that contains the point.

Assume an observation coincides with a vertex of the triangle in figure 8.3. Since the point is on top of the vertex (not inside the triangle), no weighting is needed: the entry of $A$ for that vertex is 1 and the remaining entries in the row are 0. For instance, if observation $i$ coincides with the vertex in position 3, then $A_{i3} = 1$ and the rest of row $i$ is 0.
Conversely, when $x$ lies strictly inside one of the triangles, all the elements of the row are 0 except the three in the positions of that triangle's vertices, so that the value at $x$ is weighted by the areas.
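
The barycentric weighting and the structure of one row of the projection matrix described above can be sketched in a few lines of Python. This is only an illustrative sketch, not INLA's implementation: the function names (`triangle_area`, `barycentric_weights`, `projection_row`), the four-vertex mesh and the field values are all hypothetical, and the area-based weights are valid only for points inside or on the boundary of the triangle.

```python
def triangle_area(p, q, r):
    """Unsigned area of the triangle with corners p, q, r, each an (x, y) pair."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (r[0] - p[0]) * (q[1] - p[1])
    return abs(cross) / 2.0


def barycentric_weights(x, v1, v2, v3):
    """Weights of v1, v2, v3 for a point x inside (or on the edge of) the triangle."""
    total = triangle_area(v1, v2, v3)
    # The weight of a vertex is the area of the sub-triangle opposite to it,
    # divided by the area of the whole triangle.
    return (
        triangle_area(x, v2, v3) / total,
        triangle_area(v1, x, v3) / total,
        triangle_area(v1, v2, x) / total,
    )


def projection_row(x, triangle, vertices):
    """One row of the projection matrix: zeros except at the triangle's vertices."""
    row = [0.0] * len(vertices)
    corners = [vertices[i] for i in triangle]
    for index, weight in zip(triangle, barycentric_weights(x, *corners)):
        row[index] = weight
    return row


# Hypothetical mesh with four vertices; the triangle uses vertices 0, 1 and 2.
vertices = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
triangle = (0, 1, 2)
field = [10.0, 20.0, 30.0, 40.0]  # made-up process values at the vertices

row_inside = projection_row((0.25, 0.25), triangle, vertices)
row_on_vertex = projection_row((0.0, 1.0), triangle, vertices)

# Interpolated value at the interior point: row of weights times vertex values.
value_inside = sum(a * s for a, s in zip(row_inside, field))
```

In this sketch the row for the interior point has three non-zero weights that sum to 1, while the row for the point placed on a vertex has a single 1 in that vertex's column.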

8.2 Laplace Approximation

Blangiardo and Cameletti (2015) offer an INLA-focused intuition of how the Laplace approximation works for integrals. Suppose the interest is in computing the following integral, using the notation followed throughout the analysis:

$$\int f(x)\,dx = \int \exp\{\log f(x)\}\,dx$$

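where $x$ is a random variable with density function $f(x)$. By a Taylor series expansion (Fosso-Tande 2008), truncated at the second order, $\log f(x)$ can be represented around a given point $x_0$, so that:

$$\log f(x) \approx \log f(x_0) + (x - x_0)\left.\frac{\partial \log f(x)}{\partial x}\right|_{x = x_0} + \frac{(x - x_0)^2}{2}\left.\frac{\partial^2 \log f(x)}{\partial x^2}\right|_{x = x_0} \tag{8.1}$$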

If $x_0$ is set equal to the mode $x^*$ of the distribution (its highest point), i.e. $x^* = \arg\max_x \log f(x)$, then the first-order derivative with respect to $x$ is 0, i.e. $\left.\frac{\partial \log f(x)}{\partial x}\right|_{x = x^*} = 0$. This is natural: once the function reaches its maximum, the derivative at that point is 0. Dropping the first-derivative term in eq. (8.1) gives:

$$\log f(x) \approx \log f(x^*) + \frac{(x - x^*)^2}{2}\left.\frac{\partial^2 \log f(x)}{\partial x^2}\right|_{x = x^*}$$

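Then, by exponentiating, integrating and taking the terms that do not depend on $x$ out of the integral,

$$\int f(x)\,dx \approx \exp\{\log f(x^*)\}\int \exp\left\{\frac{(x - x^*)^2}{2}\left.\frac{\partial^2 \log f(x)}{\partial x^2}\right|_{x = x^*}\right\}dx \tag{8.2}$$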

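At this point one may already recognize that expression (8.2) contains the density of a Normal. Indeed, by setting $\sigma^{2*} = -1 \Big/ \left.\frac{\partial^2 \log f(x)}{\partial x^2}\right|_{x = x^*}$, expression (8.2) can be rewritten as:

$$\int f(x)\,dx \approx \exp\{\log f(x^*)\}\int \exp\left\{-\frac{(x - x^*)^2}{2\sigma^{2*}}\right\}dx \tag{8.3}$$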

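Furthermore, the integrand is the kernel of a Normal distribution with mean equal to the mode $x^*$ and variance $\sigma^{2*}$. Computing the definite integral of (8.3) on the closed interval $[\alpha, \beta]$, the approximation becomes:

$$\int_{\alpha}^{\beta} f(x)\,dx \approx f(x^*)\sqrt{2\pi\sigma^{2*}}\,\left(\Phi(\beta) - \Phi(\alpha)\right)$$

where $\Phi(\cdot)$ is the cumulative distribution function of the Normal distribution in eq. (8.3), i.e. the Normal with mean $x^*$ and variance $\sigma^{2*}$.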
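
The steps above (expand the log-density around its mode, read off the variance from the curvature, integrate the resulting Normal kernel) can be sketched numerically as follows. This is a minimal sketch under stated assumptions: the mode is supplied by the caller rather than searched for, the second derivative is taken by central finite differences with an arbitrary step `h`, and all function names are hypothetical. The standard Normal is used as a check because its log-density is exactly quadratic, so the approximation should reproduce the exact integral up to rounding.

```python
import math


def normal_cdf(x, mean, var):
    """CDF of a Normal(mean, var) evaluated at x."""
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))


def second_derivative(g, x, h=1e-4):
    """Central finite-difference estimate of g''(x); h is an arbitrary choice."""
    return (g(x + h) - 2.0 * g(x) + g(x - h)) / (h * h)


def laplace_integral(log_f, mode, alpha, beta):
    """Laplace approximation of the integral of exp(log_f) over [alpha, beta]."""
    curvature = second_derivative(log_f, mode)
    if curvature >= 0.0:
        raise ValueError("log_f is not concave at the supplied mode")
    var = -1.0 / curvature                      # sigma^{2*}
    peak = math.exp(log_f(mode))                # f(x*)
    mass = normal_cdf(beta, mode, var) - normal_cdf(alpha, mode, var)
    return peak * math.sqrt(2.0 * math.pi * var) * mass


def log_std_normal(x):
    """Log-density of the standard Normal, used here only as a sanity check."""
    return -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)


approx = laplace_integral(log_std_normal, 0.0, -1.0, 1.0)
```

For this check `approx` should be close to the probability that a standard Normal falls within one standard deviation of its mean, about 0.6827.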

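For example, consider the chi-squared density function (it is easily differentiable and the Gamma term in the denominator is constant). The quantities of interest are $\log f(x)$, then $\partial \log f(x) / \partial x$, which has to be set equal to 0 to find the mode $x^*$, and finally $\partial^2 \log f(x) / \partial x^2$. The pdf, whose support is $x \in \mathbb{R}^{+}$ and whose degrees of freedom are $k$, is:

$$f(x; k) = \frac{x^{k/2 - 1} e^{-x/2}}{2^{k/2}\,\Gamma(k/2)}, \qquad x \geq 0$$

for which the following is computed:

$$\log f(x) = \left(\frac{k}{2} - 1\right)\log x - \frac{x}{2} - \log\left(2^{k/2}\,\Gamma(k/2)\right)$$

The single-variable score function and the mode $x^*$ (for $k > 2$),

$$\frac{\partial \log f(x)}{\partial x} = \frac{k/2 - 1}{x} - \frac{1}{2} = 0 \quad\Rightarrow\quad x^* = k - 2$$

and the second derivative evaluated at $x^*$ (for which it is known that $\sigma^{2*} = -1 \Big/ \left.\frac{\partial^2 \log f(x)}{\partial x^2}\right|_{x = x^*}$),

$$\frac{\partial^2 \log f(x)}{\partial x^2} = -\frac{k/2 - 1}{x^2} \quad\Rightarrow\quad \sigma^{2*} = \frac{(x^*)^2}{k/2 - 1} = 2(k - 2)$$

finally,

$$\chi^2_k \overset{LA}{\sim} \text{Normal}\left(x^* = k - 2,\; \sigma^{2*} = 2(k - 2)\right)$$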

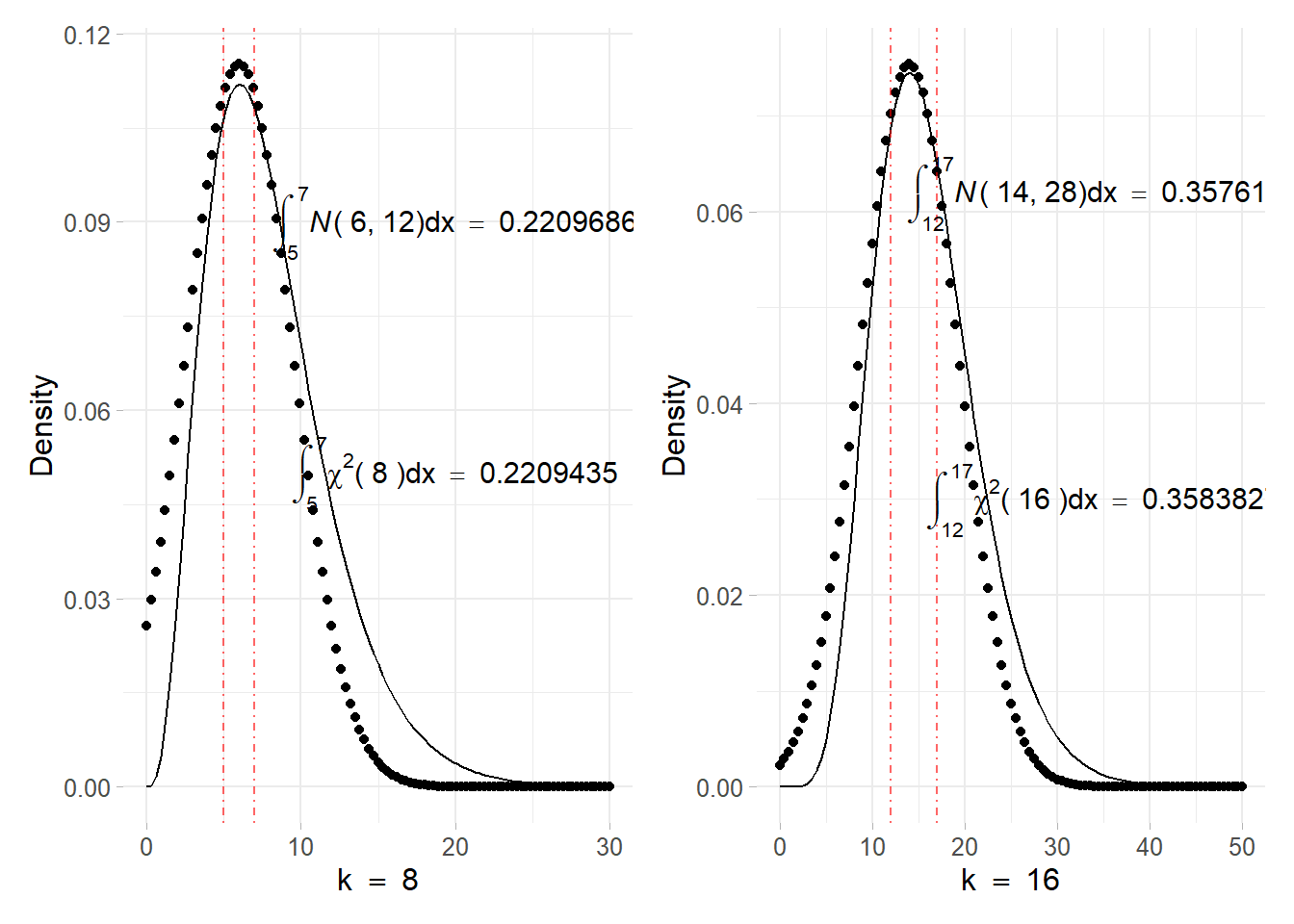

Figure 8.5 compares chi-squared densities, for two choices of the degrees of freedom, with their Laplace approximations and shows how well the approximations fit the true densities. In each case the integral is computed over a closed interval; the resulting Normal approximation is very good and differs only slightly from the original chi-squared. Note that the larger the degrees of freedom, the closer the chi-squared is to a Normal, which leads to better approximations.
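
A comparison of this kind can be reproduced with a short script. The sketch below is not the code behind figure 8.5: the degrees of freedom (`k = 20`) and the interval `[12, 24]` are illustrative choices made here, the function names are hypothetical, and the reference value is obtained with a plain composite Simpson rule rather than an exact chi-squared CDF. The Laplace side uses the standard closed-form results for the chi-squared, mode $k - 2$ and variance $2(k - 2)$.

```python
import math


def chi2_pdf(x, k):
    """Chi-squared density with k degrees of freedom, for x > 0."""
    log_pdf = ((k / 2.0 - 1.0) * math.log(x) - x / 2.0
               - (k / 2.0) * math.log(2.0) - math.lgamma(k / 2.0))
    return math.exp(log_pdf)


def normal_cdf(x, mean, var):
    """CDF of a Normal(mean, var) evaluated at x."""
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))


def laplace_chi2_integral(k, alpha, beta):
    """Laplace approximation of the chi-squared integral over [alpha, beta], k > 2."""
    mode = k - 2.0             # x* = k - 2
    var = 2.0 * (k - 2.0)      # sigma^{2*} = 2 (k - 2)
    mass = normal_cdf(beta, mode, var) - normal_cdf(alpha, mode, var)
    return chi2_pdf(mode, k) * math.sqrt(2.0 * math.pi * var) * mass


def simpson(f, a, b, n=1000):
    """Composite Simpson rule with n (even) sub-intervals, used as a reference."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


# Illustrative choices, not taken from the figure.
k, alpha, beta = 20, 12.0, 24.0
laplace = laplace_chi2_integral(k, alpha, beta)
reference = simpson(lambda x: chi2_pdf(x, k), alpha, beta)
```

With these choices the two numbers should differ by less than 0.01; repeating the comparison with a small `k` is a quick way to see the approximation degrade when the density is strongly skewed.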

Figure 8.5: Chi-squared density functions (solid lines) for two values of the degrees of freedom (top and bottom panels). The dotted lines show the corresponding Normal approximations obtained with the Laplace method.