Chapter 5 Geostatistical Data Analysis

Geostatistical or equivalently point reference data are a collection of samples indexed by coordinates pertaining to a spatially continuous surface (Moraga 2019). Coordinates might be in the form of Latitude and Longitude or Eastings and Northings, moreover they can be also unprojected with respect to a Coordinate Reference Systems (CRS e.g. lat and log), or projected (e.g. East. and North.). Data as such can monitor a vast range of phenomena, e.g. accidental fisheries bycatch for endangered species (Cosandey-Godin et al. 2015), COVID19 severity and case fatality rates in Spain (Moraga et al. 2020), PM10 pollution concentration in a North-Italian region Piemonte (Cameletti et al. 2012). Moreover a large geostatistical application takes place on Real Estate e.g. Boston house prices lattice data (mass?) are ingested by several spatial models (Bivand, Gómez Rubio, and Rue 2015), or spatio-temporal modeling for apartment transaction prices in Corsica (FR) (Ling 2019). All the examples taken before have implied inla and might have documented a spatial nature of data according to which closer observations were displaying similar values. This phenomenon is named spatial autocorrelation. Spatial autocorrelation conceptually stems from geographer Waldo Tobler whose famous quote, known as first law of geography, inspires geostatisticians:

“Everything is related to everything else, but near things are more related than distant things”

Spatial data can be partitioned into three main types, even tough they all fall under the umbrella of inla algorithm, indeed each employs context specific tools.

- Areal Data

- Point Referenced Data

- Point Pattern Data

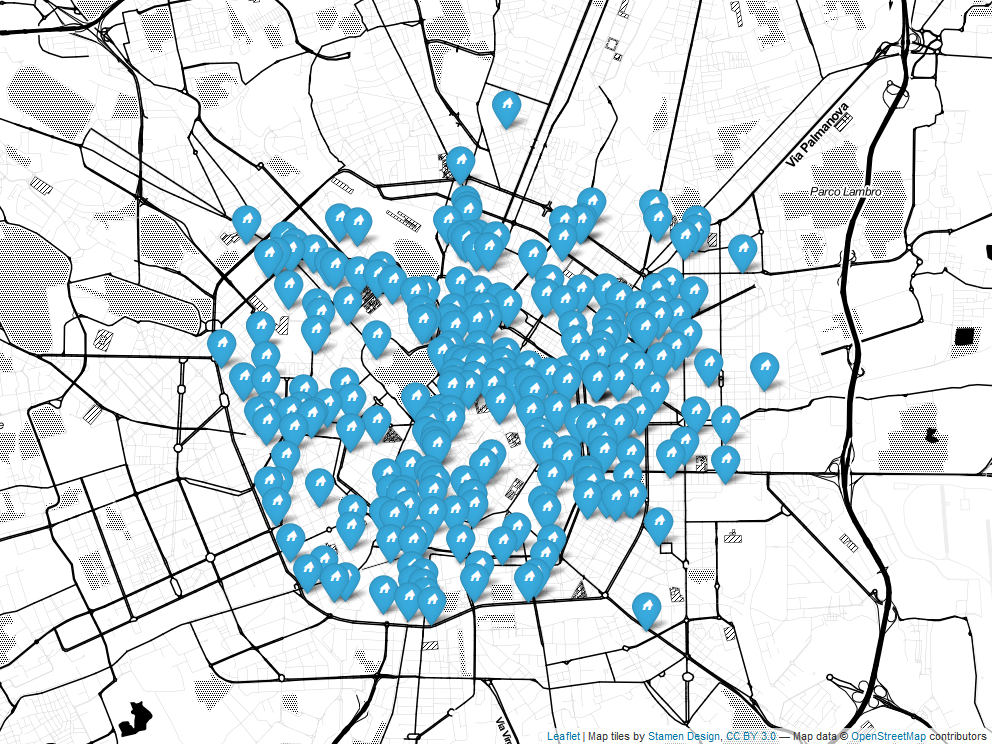

Figure 5.1: Point Referenced Data map plot (Leaflet Cheng, Karambelkar, and Xie (2019)) of a RESTful API call on Milan Rental Real Estate 20-11-2019, Author’s Source

In this chapter Gaussian Processes are seen as the continuous surface from which point referenced data are the partial realization. At first they are defined, then aer constrained to the properties of Stationarity and Isotropy. Furthermore they are considered with a suitable covariance function, i.e. Matèrn that uncovers convenient properties. These properties and the reason why Matérn is selected as candidate relies, besides its inner flexibility, on the fact that GP whose covariance function is Matérn are able to determine a GMRF 4.2 representation of the process through the Stochastic Partial Differential Equations (SPDE) approach (Lindgren, Rue, and Lindström 2011). The main benefit proceeding from a GP to a GMRF arises from the good computational properties that the latter appreciate enabling to wrap up modeling around INLA and consequently benefiting from its speed. Hedonic Price Models brings to the present analysis the theoretical foundation according to which covariates are added to the model. Then model criticism in the context of INLA is reviewed introducing two methodologies to assess model suitability based on predictive posterior distribution and deviance. In the end the prior choice falls on penalized complexity priors (Simpson et al. 2017) which constitutes a set of effective guidelines to choose priors that are compatible and natively implemented in INLA.

5.1 Gaussian Process (GP)

Geostatistical data are defined as realizations of a stochastic process indexed by space.

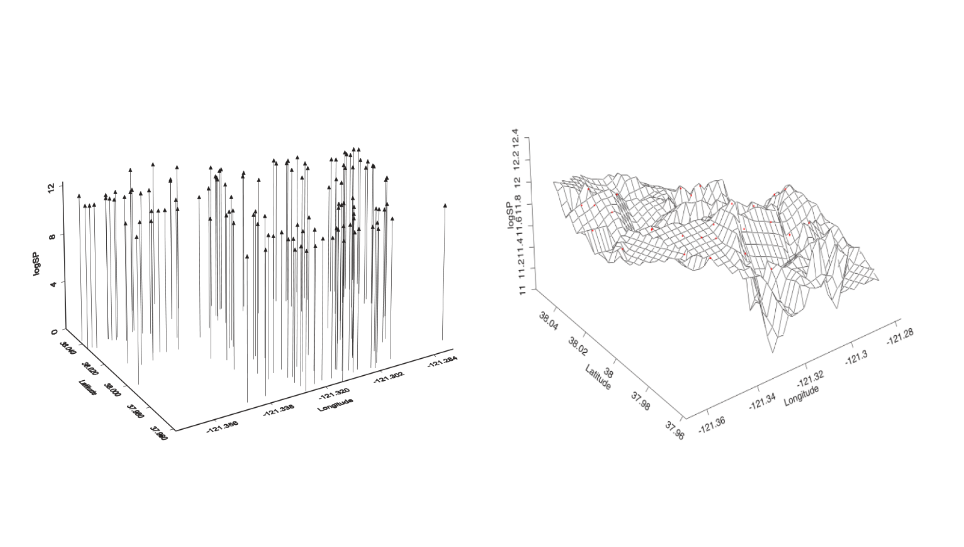

where is a (fixed) subset of (in the present work Latitude and Longitude, i.e. ). The actual data can be then represented by a collection of observations (recall notation from previous chapter 4.1) where the set points to the spatial location where data has been observed. For example, following Cameletti et al. (2012), let us assume to have collection of samples from air pollutant measurements obtained by observing a set of monitoring stations. Then The stochastic process is monitored in a fixed set of spatial indexes corresponding to the station locations (upword arrows left in figure 5.2). This information is essential to interpolate points and build a spatially continuous surface (right panel in figure 5.2) over the y-studied variable domain in order to predict the phenomenon at locations not yet observed (Paci 2020). Note that notation such as from now on will be discarded in favor of the subscript for the correspondent observation indexes i.e. .

Figure 5.2: Stockton data, Left: Spatial drop line scatterplot projected into the dim , Right: its three-dimensional surface , source Michela Blangiardo Marta; Cameletti (2015)

The first step in defining a spatial model within INLA is to impose a LGM hierarchical structure which requires at first to identify a probability distribution function for the observed data , i.e. higher level. The most common choice is to draw distributions from the Exponential family, indexed by a set of parameters as in 4.1, accounting for the spatial correlation. In the case of geostatistical data, the model parameters , following notation imposed in chapter 4 are defined as a latent Gaussian Process (GP). Then a formal definition of GP is given,

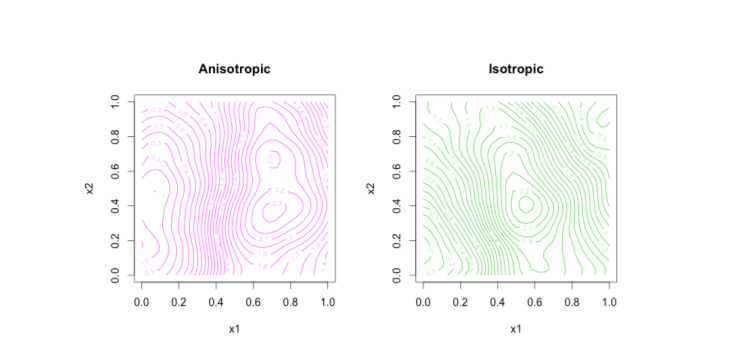

The latent GP are in function of some hyper-parameters and their respective prior . Moreover a GP is completely characterized by a mean and a spatially structured covariance matrix , whose generic element is , where is the variance component of the process and for . function generally ensures that all the values that are close together in input space will produce output values that are close together, by inheriting the validity and positive definitness characteristics from the GP. The spatial stochastic process commonly is assumed to fulfill two important properties: stationary, Isotropy (even tough both of the two can be relaxed). A process is said stationary i.e. weak stationary, if process values at any two locations can be summarized by a covariance function depending solely on the distance. In other words it is invariant under translation (E. T. Krainski 2019). A process is said Isotropical if the covariance function depends only on the between-points distance (in this context Euclidean), so it is invariant under rotation (2019). A further way of seeing this property is that Isotropy causes to stochastic processes concentric decaying contours (Paci 2020), green in fig. 5.3, meaning the vanishing of spatial dependence (Michela Blangiardo Marta; Cameletti 2015), and so for covariance values.

Figure 5.3: Left: anisotropical concentric decaying contours, Right: isotropical concentric decaying contours , source Blanchet-Scalliet et al. (2019)

In spatial statistics the isotropical assumption is very frequent despite being restrictive for describing the rich variety of interactions that can characterize spatial processes. Anyway assuming the property it offers a wide range of underlying functions that can model spatial dependence for which three are the most common ones (E. Krainski et al. 2018)

where all the parameters above are special quantities derived from the emphirical variogram. , , , and are respectively the range, the distance at which correlation vanishes, the nugget, i.e. the non spatial variance and the partial sill, i.e. the spatial effect variance (Paci 2020). In particular the focus is on the Matérn – as it is required by the SPDE approach in section 5.2 – and this should not be intended as a restriction. In fact, as long described in Gneiting, Genton, and Guttorp (2006), the Matèrn family is a very flexible class. Matérn is tuned mainly by two hyper-parameters, a scaling one , usually set equal to the range of the spatial process i.e. the distance at which the spatial dependence becomes negligible, by the empirically derived relation ), and a smoothing one usually kept fixed. An isotropical and stationary Matérn covariance expression by isolating the variance of the spatial process is:

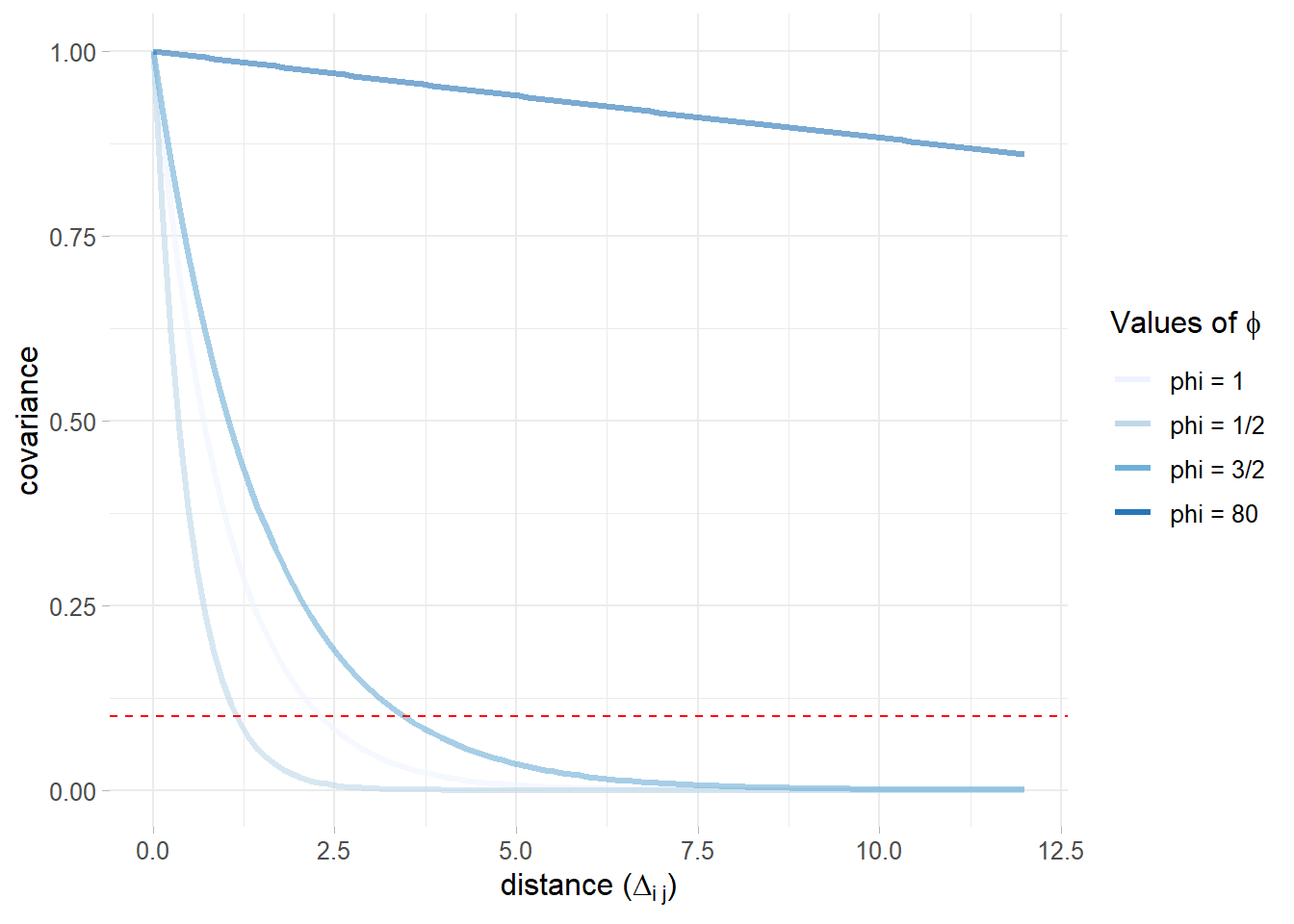

is a Gamma function depending on values and is a modified Bessel function of second kind (Yaşar and Özarslan 2016). The scaling parameter in figure 5.4 takes 4 different values showing the flexibility of Matérn to relate different distances according to varying parameters, while is kept to the value of and addresses the GP degree of smoothness. Looking at the functions in figure 5.4 and their interception with horizontal red line, when , it smoothly falls towards 0 covariance and spatial dependece decays at . When it becomes the exponential covariance function and rapidly pushes covariance to 0, When it uncovers a convenient closed form (Paci 2020) for which spatial dependence vanishes at . When , (here for graphics reasons), it becomes Gaussian covariance function. In the the case shown in section 5.2 the range, for any , is set equal to the distance at which dependence vanishes below .1, which is actually what the red line points out.

Figure 5.4: Matérn covariance function for 4 different phi values and fixed nu = .5, dashed red horizontal line when covariance is .1

In the end summarizing the result obtained (abuse of notation with to make it clear that is a spatial process) in chapter 4 with what it has been seen so far, the Gaussian process assumes the following measurement equation, where is the latent field, is the white noise error and is an identity matrix and .

5.2 The Stochastic Partial Differential Equation (SPDE) approach

Locations in the spatial setting are considered as realizations of a stationary, isotropical unobserved GP to be estimated (5.1). Before approaching the problem with SPDE, GPs were treated as multivariate Gaussian densities and Cholesky factorizations were applied on the covariance matrices and then fitted with likelihood (Paci 2020). Covariance matrices in the context of spatial and spatio-temporal models (Paci et al. 2017; Cameletti et al. 2012) are dimension matrices defined by the number of observations at each single point location (at each time stamp in spatio-temporal) (Marta Blangiardo et al. 2013). Covariance matrix as such are very dense and they were scaling with the order of (Banerjee, Carlin, and Gelfand 2014). Problem were linked to the computational costs needed for linear algebra operations for model fitting and spatial interpolation as well as prediction (Cameletti et al. 2012), having led to obvious big-n problem. The breakthrough came with Lindgren, Rue, and Lindström (2011) that proves that a stationary, isotropical (can be both relaxed at the cost of different settings) GP with Matérn covariance can be represented as a GMRF using SPDE solutions by Finite Element Method (E. T. Krainski 2019). In other words given a GP whose covariance matrix is , SPDE can provide a method to approximate without the previous computational constraints. As a matter of fact SPDE are equations whose solutions are GPs with a chosen covariance function focused on satisfying the relationship SPDE specifies (2019). Benefits are many but the most important is that the representation of the GP through a GMRF provides a sparse representation of the spatial effect through a sparse precision matrix . Sparse matrices enable convenient inner computation properties of GMRF 4.3 which are exploited by INLA algorithm 4 leading to a more feasible big-O . Mathematical details and deep understanding of the equations in SPDE are beyond the scope of the analysis. Luckily enough R-INLA has a set of functions that makes clear to the practitioner the minimal requirements to pass from discrete locations to their continuously indexed surface alter-ego. As a result of SPDE the spatial Matérn field i.e. the spatial process becomes , where the precision matrix comes from the SPDE representation (2012).

In few words SPDE approach uses a finite element (FEM method) representation to shape the Matérn field as a linear combination of basis functions defined on a triangulation (Delaunay) of the domain (2012), also named mesh. What it internally does is splitting the domain into a number of non-intersecting triangles which converge in a common edge or corner. Then the initial vertices of the triangles are set at . In order to get a proper triangulation, useful for spatial prediction, additional vertices are then added. The more vertices are added the more the triangulation is accurate since many more triangles can better interpolate the surface reaching more complex shapes. On the other side the more are the triangle the more it will take to compute the mesh and INLA performances can be damaged.

Secondly SPDE maps with a Projection matrix the values of the triangulation to the discretized spatial surface with weighted sum of areas of the underlying triangles.

A less superficial intuition on how SPDE computes triangularizes values and how it uses the projection matrix based on a weighted sum of basis functions to inject the triangulation into the GRMF is offered in the appendix in section 8.1.

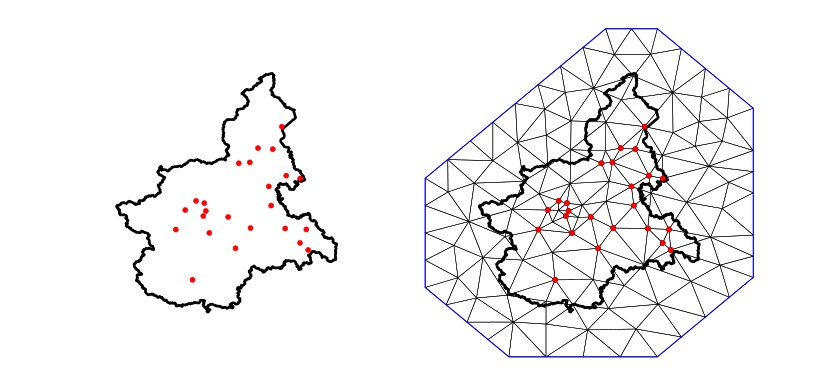

To illustrate the concept of triangulation Cameletti et al. (2012) provide a scatterplot for Piemonte PM10 concentration observed at 24 monitoring stations left in figure 5.5. Its respective mesh using 123 vertices and Piemonte borders is in right figure 5.5.

Figure 5.5: Left: monitoring stations in Piemonte region for PM10 pollution levels. Right: its triangulation using 123 vertices. Cameletti et al. (2012) source

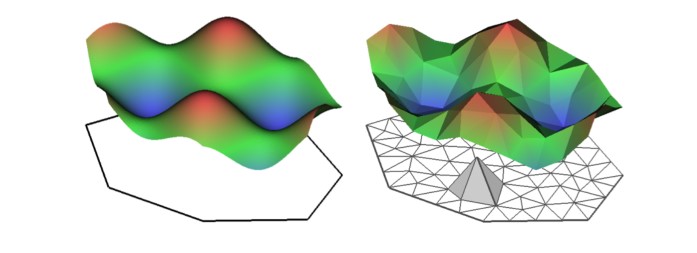

Any triangle height (the size of the spatial field at each vertex triangle) is calculated by weighted sum, with linear interpolation deciding the values within the triangle. Figure 5.6 shows a continously indexed random spatial field (left side of figure 5.6) with the corresponding SPDE on the basis of a triangulation (right panel 5.6).

Figure 5.6: Left: example of a spatial random field where , Right: SPDE representation given a triangulation, Cameletti et al. (2012) source

In INLA mesh triangularization of the region of the study is performed within the function inla.mesh.2d(). Main arguments are:

loc: the coordinates used as initial vertices of mesh,boundary: the borders of the region of the studyoffset: the distance between data positions, which determines the inner and outer extension size,cutoff: minimum distance between points approved.max.edge: value that suggest the current maximum triangle edge lengths in the area and extension. This argument is on the same scale unit as coordinates.min.anglethe opposite of max.edge

Moreover the mesh can also be built on non convex study area by prior passing coordinates on inla.nonconvex.hull() which are then sent back to the inla.mesh.2d(). A convex hull is a polygon of triangles out of the domain area, in other words the extension made to avoid the boundary effect. If borders are available (and they are) are generally preferred over non convex hull meshes. A decent mesh must have triangles of size and shape as regularly as possible (E. T. Krainski 2019).

5.3 Hedonic (rental) Price Models

The theoretical foundation of the Hedonic Price Models (from now on HPM) resides in the consumer utility theory of Lancaster (1966) together with Rosen (1974) market equilibrium. According to Lancaster the utility of a commodity does not exist by itself, instead it exists as the sum of the utilities associated to its separable characteristics. Integrating Lancater, Rosen introduces HPM and suggests that each separate commodity characteristics are priced by the markets on the basis of supply and demand equilibra. Applying HPM to Real Estate in a market context, from the buy side house prices (indeed also rents) are set as the unit cost of each household attributes, conversely from the selling side the expenditures associated to build of each them. Formalizing the results, Hedonic Price in Real Estate is expressed as a general functional form that takes as input the house characteristics vector .

Vector since now might contain a unidentified and presumably vast number of ungrouped characteristics. In this setting Malpezzi (2008) tried to organize house features by decomposing into mutually exclusive and exhaustive subgroups. The vector components involves the house price , which is in a relation with: , the structural characteristics of the house, , the neighborhood characteristics, , the locational characteristics, , the contract conditions and time dimension (not included in the model). is the vector of the parameters to be estimated. Therefore:

However the critical part of studying house characteristics in geostatistics is the estimation and a recent (and not recent) number of emerging trends are observed (Sheppard 1999). Trends, other than the methods presented in this analysis suggests semi-parametric or non-parametric methods and applications of spatial econometrics Ling (2019). Researchers would also contend with problems ranging from variable selection to model specification (2019). For semi-paramametric models a local polynomial regression is developed by Clapp (2003). The model provides a nonlinear term for the measurement of housing position values dependent on latitudes and longitudes. Proper geoadditive models family was originally proposed by Kammann and Wand (2003), which offers a combination of additive modeling (Buja, Hastie, and Tibshirani 1989) and a geostatistical component. As Ling (2019) points out the candidates for the spatial component are many, e.g. kriging component (Dey, Mukhopadhyay, and Adhikari 2017) (which will be then subsituted with a GP 5.1) or a smooth spatial trend component based on a tensor product of longitude and latitude for which Basile, Benfratello, and Castellani (2013) have investigated European industrial agglomeration externalities. The model outshines the other parameteric model performances by better managing spatial unobserved patterns. Furthermore they made available a third study dimension which is time (2019). Spatial econometrics’ evolution trends are seen in Shi and Lee (2017) and lately in Anselin (2010) where point referenced data are modeled with endogenous time varying spatial weights matrices and unobserved common factors and ultimately are fitted with traditional bayesian estimation methods as MCMC (2010). Then two further interesting modeling approach does not fall perfectly in the categories, but are higly considered in literature within the Hedonic Price models. Dubé and Legros (2013) recognize that the modeling tools available analyzing point referenced data were not sufficient to take into account all the dimensions according to which they want to evaluate the phenomenon. They acknowledge that Moran’s I index and his statistics test relies mainly on an exogenous specification of a spatial weights matrix (2013). As a result they assemble a spatio‐temporal weights matrix to evaluate spatial dependence through Moran’s I index whose application is on real estate data (selling) for Québec City from 1986 to 1996. The second approach was addressed in Baltagi, Bresson, and Etienne (2015) whose object is price estimation based on flats sold in the city of Paris over the period 1990–2003. This is a rich and unbalanced pseudo‒panel data which are modeled with spatial lag. Results displayed a nested structure of the Paris housing data, which have been tested with likelihood ratio. A similar approach was followed by Nardelli and Arbia (Arbia and Nardelli 2020) which collected crowdsourced as well as webscraped (as in section 2) data and lately applied Spatial Lag Model (SLM) on a “post-sampled” (Arbia et al. 2020) version of the same. As a result bias in the model is diminished, indeed variance of the estimators is increased. A further aspect of the problem is posed by scholars not considering rents to be representative for the actual value of the real estate due to heavier legislation on rent and indebtedness (for selling). As a consequence predictors choice and functional relatioship should be different from the selling. Nevertheless in many empirical analysis rent values are considered as a proxy for real estate pricing when considered in long-run (Herath and Maier 2011). A further argument to endorse this hypothesis is brought by Manganelli, Morano, and Tajani (2013) considering housing a commodity, then the selling or the rental option should be interchangeable economic actions with respect to same inner need to be satisfied. This assumption is also stronger in this context since Manganelli, Morano, and Tajani have centered their analysis on italian Real Estate data. Moreover Capozza and Seguin (1996) discussed on how much rent-price ratio predicts future changes both in rents and prices. Among all the other points raised they brought the decomposition of rent-price ratio into two parts: the predictable part and the unexplained residuals part. The predictable part was discovered to be negatively correlated with price changes, in other words cities in which prices are relatively high with respect to rents are associated with higher capital gains that might justify that misalignment. This is also true for the opposite, that is cities in which prices are lower with respect to the rents, and this effect can not be associated to any local condition, realize lower capital gains. A further argument is offered by Clark (Clark 1995) which went after the Capozza and Seguin work. Rent-price ratio is negatively correlated with following future changes in rents. In other words prices are still higher when areas in which they are observed documents an increase in rent prices.

5.4 Model Criticism

Since INLA can fit a wide range of models then the flexibility should be reflected by a mouldable tool to check model suitability. Model criticism may regard the research on which variables are used in the model, which assumptions are made on parameters and on the likelihood as well as the prior choice addressed in 5.5. However here are two common modi operandi which are also implemented inside inla: one based on predictive distribution and the other on deviance. Note that all specifications mentioned in this analysis can be determined by setting the option in control.compute.

5.4.1 Methods based on the predictive distribution

One of the most used method to assess Bayesian model quality is LOOCV, i.e. Leave One Out Cross Validation and it is also default choice in INLA. Assume to have data from which it is left out one single observation, as a result the assessment set is and the validation set is , where the notation is the same for chapter 4. Two metrics are assumed to be demonstrative:

- CPO Conditional Predictive Ordinate (Pettit 1990): , For any observation the CPO is the posterior probability of observing the left out when the model is fitted with assessment set. High values imply that the model suits well the data, while small values indicate a poor fit, i.e. outlier. (Gómez Rubio 2020). The negative log-summation (LPML Geisser and Eddy (1979)) of each CPO gives a cross-validatory summary measure of fit (Wang, Yue, and Faraway 2018) i.e.

- PIT Predictive Integral Transform (Marshall and Spiegelhalter 2007): . PIT computes the probability of a new response value being smaller than the observed real value and it is calculated for each left out observation:

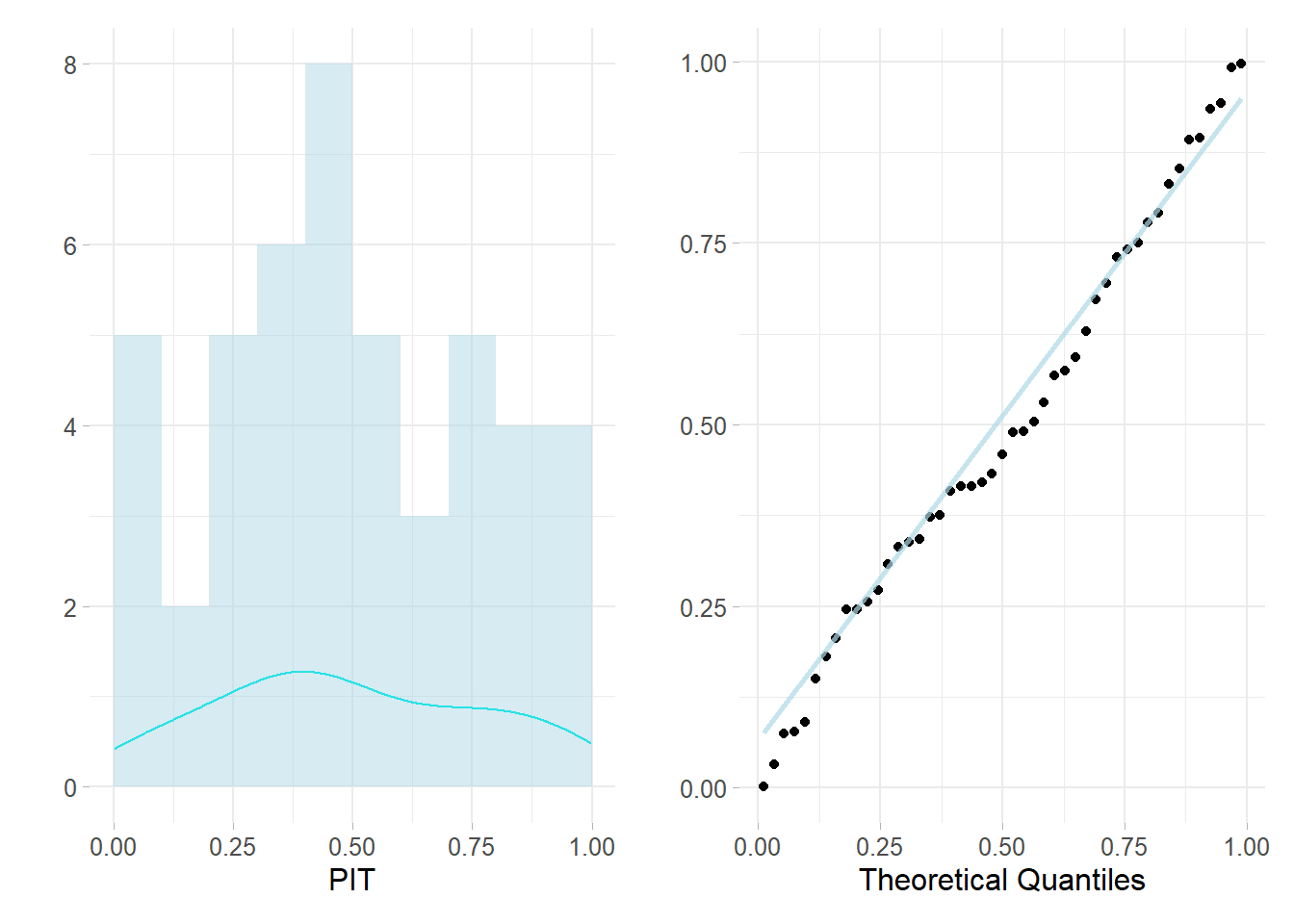

Inla also provides for both of the predictive methods an inner functionality to automatically handle abnormalities while computing the two quantities. Inla encodes values with 1 when predictions are not reliable, otherwise they are set equal to 0. Moreover the empirical distribution of the PIT can be used to asses predictive performance: if it is Uniform, so there are not values that significantly differ from the others then the model suits the data. Otherwise if the distribution almost approximates any of the other one then the “out of the bag” prediction suggest a model miss-pecification.

For example assume to have data from a 1970’s study on the relationship between insurance redlining in Chicago and racial composition, fire and theft rates, age of housing and income in 47 zip codes (inherited by brinla(Faraway, Yue, and Wang 2021)). Assume also to fit a linear model whose response is “involact” being new FAIR plan policies and renewals per 100 housing units and the rest are predictors, i.e. . Then the resulting LPML is: -20.6402106 Furthermore in left panel of fig. 5.7 the resulting cross-validated PIT resembles a Uniform distribution which is also highlighted whose density is highlighted in tone in the lower part. In the right side a Quantile-Quantile for a Uniform (whose parameter are mean 0 and std 1) plot evidences how much the points are attached to the diagonal, confirming the well behaved model.

Figure 5.7: Left: histogram of cross-validated PIT, Right: QQ plot for Unif(0,1)

Posterior Predictive checking methods (Gelman, Meng, and Stern 1996) exploit a full cross-validation where , operating on the full set of observation. The statistics capitalized below are quite commonly used in practice, but they are high context dependent:

- the posterior predictive distribution: which is the likelihood of a replicate observation. When values are small that indicates that are those values are coming from tails, since the area under the curve (i.e. probability) is less. If this happens for many observation then outliers are driving the model leading to poor estimates

- the posterior predictive p-value whose math expression is: for which values near to 0 and 1 indicates poor performances.

- the Root Mean Square Predictive Error RMSE:

R-INLA has already antiticipated in chapter 4 section?? have dedicated function to compute statistics on posterior distribution e.g. inla.pmarginal() returning the cumulative density distribution.

5.4.2 Deviance-based Criteria

If there is an interest in comparing multiple models, then their deviance may be used. Given data and its likelihood function, along with its parameters then the deviance is:

The model’s deviance tests the likelihood variability conditioned to its parameters. Since this is a random variable tt can be analyzed by several statistics such as mean, median, mode etc. The most used is the posterior mean deviance i.e. which is also robust (Blangiaro-Cameletti?). Indeed it suffers from cost complexity, as a result DIC proposed by Spiegelhalter et al. (2002) adds to the deviance a penalization for complex model i.e. from which, following (Blangiaro-Cameletti?) obtain,

Data is best served in models with small-scale DICs and the correspondent INLA option setting is analogous to the ones seen sec. 5.4.1. INLA moreover take advantage of the hierarchical structure and computes different posterior deviances for latent parameters i.e. mean and hyper parameters i.e. mode (due to skewness). For further discussions Spiegelhalter et al. (2014) oppose DIC with other criteria for model comparison. Finally the Watanabe Akaike information criterion (WAIC) which is more Bayesian orthodox in setting up the criteria, for this reason is also more preferred (Gelman, Hwang, and Vehtari 2014).

5.5 Penalized Complexity Priors

The priors choice is the most central part of a Bayesian analysis and at the same time the weakest since any selection might be criticized. Priors expression involves a considerably high amount of subjectivity and domain experience which may be imported from other comparable literature works or results. However according to purists Bayesian priors should be decided a-priori, without either looking at the data, nor the posterior results. This can be tedious since many models are sensitive to priors, as evidenced in the example in sec. 4.4. Priors may negatively impact posteriors when are wrong and they usually require a later revision. The choice in this context is also more difficult since it is also constrained to LGM requirements for which the latent field demands them to be jointly Gaussian. Simpson et al. (2017) provide a solid backbone knowledge on priors specification in Hierarchical models by setting up 4 guidelines principles on top of which it is built a new prior class called Penalized Complexity (PC) priors. Before jumping into the principles it is needed an abstract concept that goes by the name of “base model.” For a general density which is controlled by a flexibility parameter the base model is the most uncomplicated one in the class. Following the notation this would be the model corresponding to . The base model ideally grabs the parameter choice with the lowest possible complexity given the model and set it as a ground benchmark. The following example regards base model for a a Gaussian Random effect and it considers a multivarite gaussian distributed with mean 0 and precision matrix , i.e. , where . The base model tries to put the mass (2017) on since the base model in this case is a model without the random effect. At this point it is possible to present the building blocks, first priciple is Occam’s razor according to which priors should be weighted down in fuction of the distance between the added complexity and the base model i.e. simpler models are preferred. The second principle regards how it is measured complexity whose solution is found in KLD (Kullback–Leibler divergence), calculating distance from base model. KLD in this context along with principle 1 affirms that the prior shall have high mass in areas where replacing the base model with the flexible model will not cause any information loss. Principle 3 is constant rate penalization as names suggest relates the distance to a constant rate penalization whose assumption implies an exponential prior on the distance scale, i.e. . In the end, the PC prior is described in the required scale with probability statements on the model parameters. Statement regards the selection of two further user defined (here the subjectivity) hyper-parameters, regulating the tail event and the mass to put to this event such that . A prior satisfying all the requirements is said Penalized in Complexity. Priors of this class should be then derived with respect to the specific content they are needed. In this context PC priors are seen for Gaussian Random effect, i.e. the GP 5.1 modeling spatial dependence for which a precision hyper parameter is demanded. The PC prior for the precision is on standard deviation scale .

Then the GMRF latent field has mean 0 and covariance matrix , i.e. . The base model considers the absence of the random effect which implies . Then the derived PC prior (Simpson et al. 2017) takes the form of an exponential distribution, i.e. “type-2 Gumbel distribution” eq: (5.2), whose rate parameter is determining the severity of the penalty when diverging from the base model. Note that the parameter is on the standard deviation scale (in contrast to the Gamma on the precision seen in 4.4).

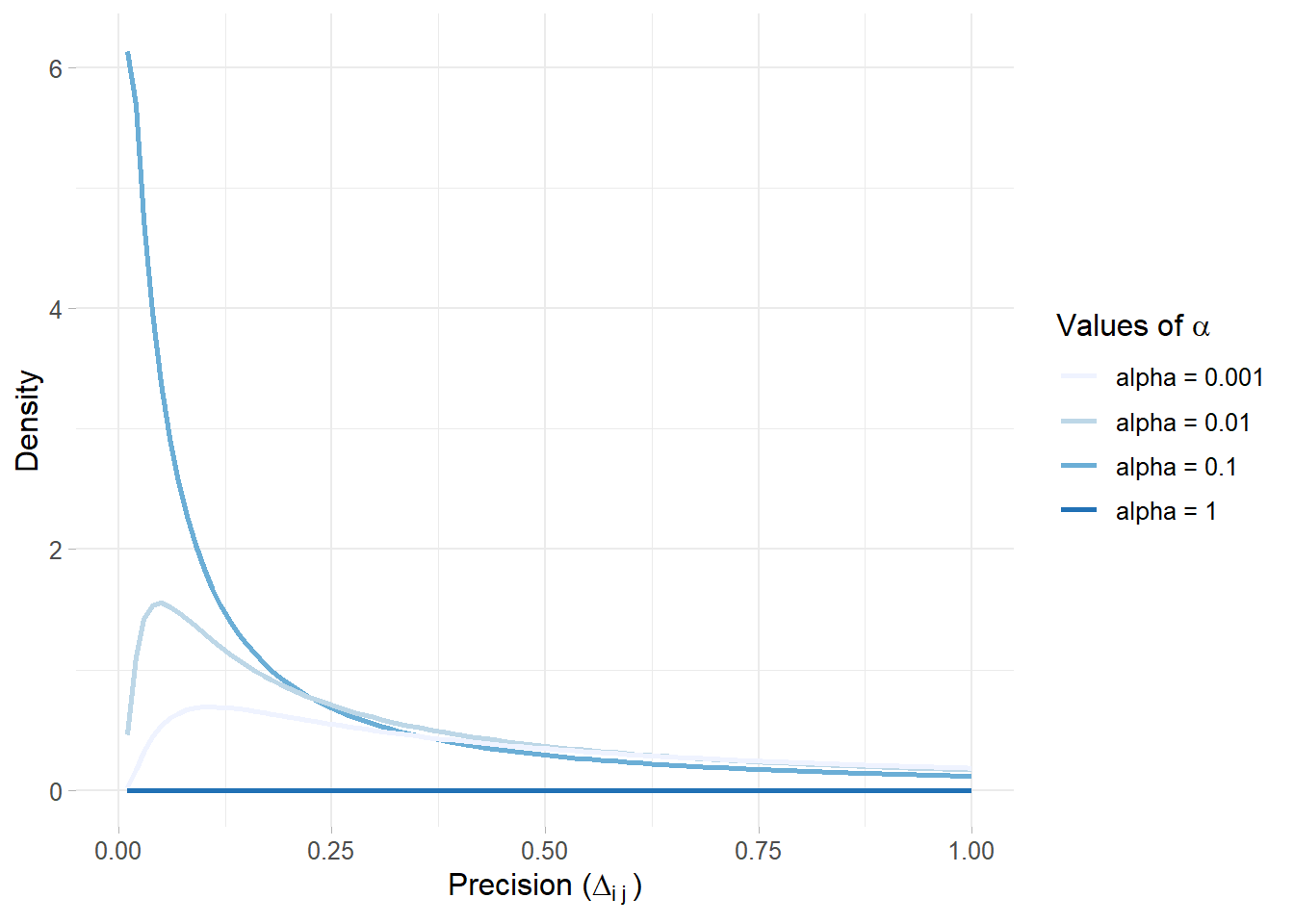

The distribution depends on a rate parameter that regulates the penalty according to the probability natural criterion (slides?) . in this case is an upper limit for the standard deviation (being in the base model) and a small probability. The probability statement aforementioned implies the follwing realtion . Some further investigations of Simpson et al. (2017) acknowledged that parameters upper bounds for are , and , where alpha regulates the importance of the prior believe. Ideally it would be picked up drawing on previous experience. Indeed a reasonable choice in the absence of knowledge might be taking the semivariogram range 5.1, i.e. where the spatial dependece disappears. PC priors are natively implemented in INLA and are shown to be well suited for the modular additive definition (Gómez Rubio 2020). As a consequence they are selected as prior candidates with a reasonable selection and no sensitivity analysis is performed i.e. evalutaing posterior disstribution while prior are changing.

Fig. 5.9 indicates various PC priors using several values for the precision . Note that increasing ’s values contribute to a stronger prior belief for which then leads to a higher conviction of .

Figure 5.9: PC priors for the precision by varying alpha values and fixing