Chapter 4 INLA

Bayesian estimation methods, whether based on MCMC (Brooks et al. 2011) or on other Monte Carlo simulation techniques, are usually computationally much more demanding than their Frequentist counterparts (Wang, Yue, and Faraway 2018). The problem is even more critical in spatial and spatio-temporal settings (Cameletti et al. 2012), where the dimension and the density (in the sense of the share of non-zero entries) of the matrices involved quickly make computations infeasible.

The computational burden comes in particular from linear algebra operations on large dense covariance matrices, whose cost in the aforementioned settings scales as $\mathcal{O}(n^3)$.

INLA (Håvard Rue, Martino, and Chopin 2009; Håvard Rue et al. 2017) stands for Integrated Nested Laplace Approximation: it is a fast and accurate deterministic algorithm whose computational cost grows much more slowly with $n$, because it works with sparse precision matrices instead of dense covariance matrices (for GMRFs the key matrix factorisation costs $\mathcal{O}(n)$ for temporal and $\mathcal{O}(n^{3/2})$ for two-dimensional spatial structures, Havard Rue and Held 2005). INLA is an alternative to, and by no means a substitute for, traditional Bayesian inference methods (Rencontres Mathématiques 2018). INLA focuses on Latent Gaussian Models (LGMs) (2018), a rich class that includes many regression models and supports spatial and spatio-temporal models.

INLA shortens model fitting time for essentially two reasons, both tied to the LGM setting: Gaussian Markov random fields (GMRFs), which provide a sparse matrix representation, and Laplace approximations of the integrals defining the posterior marginals, combined with suitable search strategies.

The chapter ends by presenting the R-INLA project and package, focusing on their essential aspects.

Further notes: the order in which the methodology is presented retraces the canonical one by Håvard Rue, Martino, and Chopin (2009), which in the author's opinion is the most effective and is the default reference for the related literature. The approach is kept largely unchanged because, being top-down, it naturally fits the hierarchical framework imposed by INLA. The GMRF section relies heavily on Havard Rue and Held (2005).

Notation is borrowed from Blangiardo and Cameletti (2015) and integrated with Gómez Rubio (2020), whereas examples are drawn from Wang, Yue, and Faraway (2018). Vectors and matrices are typeset in bold, e.g. $\boldsymbol{\theta}$, so each time they occur they must be considered as the ensemble of their values, whereas the notation $\boldsymbol{\theta}_{-i}$ denotes all the elements of $\boldsymbol{\theta}$ except $\theta_i$.

$\pi(\cdot)$ is a generic notation for the density of its arguments, and $\tilde{\pi}(\cdot)$ denotes its Laplace approximation. The mathematical details of Laplace approximations, e.g. optimal grid strategies and integration points, are omitted; a quick intuition on how the Laplace approximation works is given instead in appendix 8.2.

4.1 The class of Latent Gaussian Models (LGM)

Bayesian theory is straightforward, but it is not always simple to compute the posterior and other quantities of interest (Wang, Yue, and Faraway 2018). There are three ways to obtain a posterior estimate:

- Exact estimation, i.e. working with conjugate priors; however, conjugate priors are relatively few and are mostly available for simple models.

- Sampling, i.e. generating samples from the posterior distribution with MCMC methods (Metropolis et al. 1953; Hastings 1970), later applied to Bayesian statistics by Gelfand and Smith (1990). MCMC methods have improved over time thanks to algorithmic optimisation and to progress in both hardware and software; nevertheless, for certain combinations of models and data they either do not converge or take an unacceptable amount of time (2018).

- Approximation, through numerical integration.

INLA follows the third route with a strategy built on three elements, LGMs, Gaussian Markov Random Fields (GMRFs) and Laplace approximations, which also set the order in which the topics are treated below. Despite being little known, LGMs are very flexible and can host a wide range of models, such as regression, dynamic, spatial and spatio-temporal models (Cameletti et al. 2012). An LGM requires three further interconnected elements: likelihood, latent field and priors. To start, consider a generalisation of the linear predictor that accounts for both linear and non-linear effects of covariates:

$$
\eta_i = \beta_0 + \sum_{m=1}^{M} \beta_m x_{mi} + \sum_{l=1}^{L} f_l(z_{li}) \tag{4.1}
$$

where $\beta_0$ is the intercept, $\boldsymbol{\beta} = \{\beta_1, \dots, \beta_M\}$ are the coefficients quantifying the linear effects of the covariates $x_{mi}$, and $\boldsymbol{f} = \{f_1, \dots, f_L\}$ is a set of random effects defined in terms of a set of covariates $z_{li}$, e.g. random walks (Havard Rue and Held 2005) or Gaussian processes (Besag and Kooperberg 1995). Such models are termed Generalised Additive Models (GAMs) or Generalised Linear Mixed Models (GLMMs) (Wang, Yue, and Faraway 2018). For the response $\boldsymbol{y} = (y_1, \dots, y_n)$ an exponential family distribution is specified, whose mean $\mu_i = E(y_i)$ is linked to $\eta_i$ in eq. (4.1) through a link function $g(\cdot)$, i.e. $g(\mu_i) = \eta_i$. All the latent (in the sense of unobserved) components of the model can then be grouped into a single variable, called the latent field and denoted by $\boldsymbol{\theta} = \{\beta_0, \boldsymbol{\beta}, \boldsymbol{f}\}$, where each observation $y_i$ is connected, through $\eta_i$, to a combination $\theta_i$ of parameters in $\boldsymbol{\theta}$. The latent parameters may in turn depend on some hyper-parameters $\boldsymbol{\psi}$. Then, given $\boldsymbol{y}$ and assuming conditional independence, the joint distribution conditioned on both parameters and hyper-parameters is expressed by the likelihood:

$$
\pi(\boldsymbol{y} \mid \boldsymbol{\theta}, \boldsymbol{\psi}) = \prod_{i=1}^{n} \pi(y_i \mid \theta_i, \boldsymbol{\psi}) \tag{4.2}
$$
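To make eq. (4.1) and (4.2) concrete, the R sketch below simulates a small LGM with a Poisson response and log link, one covariate with a linear effect and one non-linear effect modelled as a first-order random walk, and then evaluates the log-likelihood as a sum over observations, which is what conditional independence allows. All parameter values and object names are illustrative assumptions, not part of the original analysis.

```r
# Illustrative simulation of an LGM (all values are assumptions)
set.seed(1)
n <- 100
x <- runif(n)                         # covariate with a linear effect
beta0 <- 0.5                          # intercept
beta1 <- 1.2                          # linear coefficient

# Non-linear effect f(z) for z = 1, ..., n: a random walk of order 1,
# centred to sum to zero (the usual identifiability constraint)
f <- cumsum(rnorm(n, sd = 0.05))
f <- f - mean(f)

# Linear predictor, eq. (4.1)
eta <- beta0 + beta1 * x + f

# Exponential family response with log link: y_i ~ Poisson(exp(eta_i))
y <- rpois(n, lambda = exp(eta))

# Log-likelihood, eq. (4.2): conditional independence turns the product into a sum of logs
loglik <- function(y, eta) sum(dpois(y, lambda = exp(eta), log = TRUE))
loglik(y, eta)
```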

The conditional independence assumption guarantees that, for a generic pair of observations $y_i$ and $y_j$ with $i \neq j$, conditionally independent given the latent field, the joint conditional distribution factorises as $\pi(y_i, y_j \mid \boldsymbol{\theta}, \boldsymbol{\psi}) = \pi(y_i \mid \theta_i, \boldsymbol{\psi}) \, \pi(y_j \mid \theta_j, \boldsymbol{\psi})$ (Blangiardo and Cameletti 2015), which is exactly the structure of the likelihood in eq. (4.2). The assumption is a building block of INLA since, as shown later, it produces patterns of zeros inside the matrices involved, with considerable computational benefits. Note also that in eq. (4.2) the product index formally ranges over $\mathcal{I} \subseteq \{1, \dots, n\}$, the set of indices of the observed responses. When an observation is missing, i.e. $i \notin \mathcal{I}$, INLA automatically discards it from model estimation (2020); this will be important for missing value imputation in sec. 6.6.

Moreover, as required by LGMs, Gaussian priors must be imposed on the intercept and on each linear and non-linear effect, with either a univariate or a multivariate normal density, so that the additive predictor $\eta_i$ is Gaussian (2018). An example may clarify this requirement. Assume a Normally distributed response and suppose the goal is to specify a Bayesian Generalised Linear Model (GLM). The linear predictor can then be written as $\eta_i = \beta_0 + \beta_1 x_i$, where $\beta_0$ is the intercept and $\beta_1$ the slope for a generic covariate $x_i$. Within the LGM framework, Gaussian priors must be specified on $\beta_0$ and $\beta_1$, such that $\beta_0 \sim N(\mu_0, \sigma_0^2)$ and $\beta_1 \sim N(\mu_1, \sigma_1^2)$, so that the latent linear predictor is $\eta_i \sim N(\mu_0 + \mu_1 x_i, \sigma_0^2 + \sigma_1^2 x_i^2)$. Some linear algebra (2018) shows that $\boldsymbol{\eta} = (\eta_1, \dots, \eta_n)'$ is a Gaussian process with mean structure $\boldsymbol{\mu}$, $\mu_i = \mu_0 + \mu_1 x_i$, and covariance matrix $\boldsymbol{\Sigma}$, $\Sigma_{ij} = \sigma_0^2 + \sigma_1^2 x_i x_j$. The hyper-parameters $\sigma_0^2$ and $\sigma_1^2$ are either fixed or estimated by placing hyperpriors on them. In this context $\boldsymbol{\theta} = \{\beta_0, \beta_1\}$ groups all the latent components and $\boldsymbol{\psi} = \{\sigma_0^2, \sigma_1^2\}$ is the vector of hyper-parameters.

A clear hierarchical structure emerges, with three levels: a higher level represented by the exponential family distribution of $\boldsymbol{y}$, given the latent parameters $\boldsymbol{\theta}$ and the hyper-parameters $\boldsymbol{\psi}_1$; and a medium level given by the latent Gaussian random field, whose density depends on some other hyper-parameters $\boldsymbol{\psi}_2$.
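The lower level is given by the joint distribution of the hyper-parameters, possibly a product of several independent distributions, on which priors are specified. So, letting $\boldsymbol{y}$ be at the higher level, an exponential family distribution is assumed for it given a first set of hyper-parameters $\boldsymbol{\psi}_1$, usually referring to the measurement error precision (Blangiardo and Cameletti 2015). Therefore, as in (4.2),

$$
\boldsymbol{y} \mid \boldsymbol{\theta}, \boldsymbol{\psi}_1 \sim \prod_{i=1}^{n} \pi(y_i \mid \theta_i, \boldsymbol{\psi}_1) \tag{4.3}
$$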

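At the medium level, a latent Gaussian random field (LGRF) is specified on the latent field $\boldsymbol{\theta}$, given $\boldsymbol{\psi}_2$, i.e. the rest of the hyper-parameters:

$$
\boldsymbol{\theta} \mid \boldsymbol{\psi}_2 \sim \pi(\boldsymbol{\theta} \mid \boldsymbol{\psi}_2) = (2\pi)^{-n/2} \, |\boldsymbol{Q}(\boldsymbol{\psi}_2)|^{1/2} \exp\left(-\frac{1}{2} \boldsymbol{\theta}' \boldsymbol{Q}(\boldsymbol{\psi}_2) \boldsymbol{\theta}\right) \tag{4.4}
$$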

where $\boldsymbol{Q}(\boldsymbol{\psi}_2)$ denotes a positive definite matrix, $|\cdot|$ its determinant and $'$ the transpose operator. The matrix $\boldsymbol{Q}$ is called the precision matrix: it outlines the underlying dependence structure of the data, and its inverse $\boldsymbol{Q}^{-1}$ is the covariance matrix (Wang, Yue, and Faraway 2018). In the spatial setting this is critical since, by specifying the multivariate Normal distribution of eq. (4.4) with a sparse precision matrix, the latent field becomes a GMRF. Thanks to conditional independence, GMRF precision matrices are sparse, and linear algebra and numerical methods for sparse matrices save model fitting time (Havard Rue and Held 2005). At the lower level the priors on the hyper-parameters $\boldsymbol{\psi} = (\boldsymbol{\psi}_1, \boldsymbol{\psi}_2)$ are collected, specified either as a single joint prior distribution or as the product of independent priors. Since the end goal is the joint posterior of $\boldsymbol{\theta}$ and $\boldsymbol{\psi}$, given the priors $\pi(\boldsymbol{\psi})$ expressions (4.3) and (4.4) can be combined, obtaining:

$$
\pi(\boldsymbol{\theta}, \boldsymbol{\psi} \mid \boldsymbol{y}) \propto \pi(\boldsymbol{\psi}) \, \pi(\boldsymbol{\theta} \mid \boldsymbol{\psi}) \prod_{i=1}^{n} \pi(y_i \mid \theta_i, \boldsymbol{\psi}) \tag{4.5}
$$

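Which, following Blangiardo and Cameletti (2015), can be further developed as:

$$
\pi(\boldsymbol{\theta}, \boldsymbol{\psi} \mid \boldsymbol{y}) \propto \pi(\boldsymbol{\psi}) \, |\boldsymbol{Q}(\boldsymbol{\psi})|^{1/2} \exp\left(-\frac{1}{2} \boldsymbol{\theta}' \boldsymbol{Q}(\boldsymbol{\psi}) \boldsymbol{\theta} + \sum_{i=1}^{n} \log \pi(y_i \mid \theta_i, \boldsymbol{\psi})\right) \tag{4.6}
$$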

From this, the two quantities of interest are the posterior marginal distribution $\pi(\theta_i \mid \boldsymbol{y})$ of each element of the latent field and the posterior marginal $\pi(\psi_k \mid \boldsymbol{y})$ of each hyper-parameter.
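Eq. (4.6) translates almost literally into code. The sketch below evaluates the unnormalised log joint posterior for one illustrative choice of ingredients: a Poisson likelihood with log link (so that $\theta_i$ acts as the linear predictor of $y_i$), a zero-mean Gaussian latent field with precision $\boldsymbol{Q}(\boldsymbol{\psi})$, and a Gamma prior on a single precision hyper-parameter. The function names, the iid structure $\boldsymbol{Q} = \psi \boldsymbol{I}$ and the prior are assumptions made only for the example; the half log-determinant is read off the Cholesky factor of $\boldsymbol{Q}$.

```r
# Unnormalised log of eq. (4.6):
# log pi(psi) + 0.5 log|Q(psi)| - 0.5 theta' Q(psi) theta + sum_i log pi(y_i | theta_i, psi)
log_joint_posterior <- function(theta, psi, y, Q_fun, log_prior_psi) {
  Q <- Q_fun(psi)
  R <- chol(Q)                                   # Q = R'R, R upper triangular
  half_logdet <- sum(log(diag(R)))               # equals 0.5 * log|Q|
  quad <- drop(crossprod(theta, Q %*% theta))    # theta' Q theta
  loglik <- sum(dpois(y, lambda = exp(theta), log = TRUE))
  log_prior_psi(psi) + half_logdet - 0.5 * quad + loglik
}

# Hypothetical ingredients: iid latent field with precision psi, Gamma(1, 0.1) prior on psi
Q_iid <- function(psi, n = 5) diag(psi, n)
log_prior_gamma <- function(psi) dgamma(psi, shape = 1, rate = 0.1, log = TRUE)

y <- c(2, 0, 3, 1, 4)
theta <- log(c(2, 0.5, 3, 1, 4))
lp <- log_joint_posterior(theta, psi = 2, y = y, Q_fun = Q_iid, log_prior_psi = log_prior_gamma)
lp
```

Because the normalising factor $(2\pi)^{-n/2}$ is dropped in (4.6), the value differs from a fully normalised log density only by a constant, which does not matter when comparing points or building approximations.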

Bayesian inference follows from eq. (4.6), and INLA can approximate the posterior distributions of the parameters through Laplace approximations. Unfortunately, INLA cannot efficiently handle all LGMs. In general INLA relies on the following supplementary assumptions (2018):

- The number of hyper-parameters should be small, normally between 2 and 5 and not greater than 20.

- When the number of observations is considerably high ($10^4$ to $10^5$), the latent Gaussian field must be a Gaussian Markov random field (GMRF).
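The Gaussian GLM example of this section can be checked numerically. With independent priors $\beta_0 \sim N(\mu_0, \sigma_0^2)$ and $\beta_1 \sim N(\mu_1, \sigma_1^2)$, the vector $\boldsymbol{\eta}$ has mean $\mu_0 + \mu_1 x_i$ and covariance $\Sigma_{ij} = \sigma_0^2 + \sigma_1^2 x_i x_j$. The sketch below compares this closed form with a Monte Carlo estimate obtained by drawing the coefficients from their priors; the numeric values of the prior parameters and of the covariate are arbitrary assumptions.

```r
set.seed(42)
mu0 <- 1;  mu1 <- -0.5         # prior means of intercept and slope
s0  <- 2;  s1  <- 0.7          # prior standard deviations
x <- c(-1, 0, 0.5, 2)          # a few covariate values

# Closed form: eta = beta0 + beta1 * x is Gaussian with
mean_eta  <- mu0 + mu1 * x
Sigma_eta <- s0^2 + s1^2 * outer(x, x)      # Sigma_ij = s0^2 + s1^2 * x_i * x_j

# Monte Carlo check: draw (beta0, beta1) from their priors and build eta
B <- 2e5
beta0 <- rnorm(B, mu0, s0)
beta1 <- rnorm(B, mu1, s1)
eta <- outer(beta0, rep(1, length(x))) + outer(beta1, x)   # B x length(x) matrix

round(colMeans(eta) - mean_eta, 3)   # differences close to 0
round(cov(eta) - Sigma_eta, 2)       # differences close to 0
```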

4.2 Gaussian Markov Random Field (GMRF)

For INLA to work efficiently, the latent field must be not only Gaussian but also a Gaussian Markov Random Field (from now on GMRF). A GMRF is a genuinely simple structure: it is just a random vector following a multivariate normal (or Gaussian) distribution (Havard Rue and Held 2005). It is more interesting, however, to study the restricted set of GMRFs that satisfy conditional independence assumptions (section 4.1), hence the term "Markov". To expand on the concept of conditional independence, assume a vector $\boldsymbol{x} = (x_1, x_2, x_3)'$ where $x_1$ and $x_2$ are conditionally independent given $x_3$, i.e. $x_1 \perp x_2 \mid x_3$. Then, if the objective is $x_1$ and $x_3$ is known, uncovering $x_2$ gives no further information on $x_1$. The joint density of $\boldsymbol{x}$ is

$$
\pi(\boldsymbol{x}) = \pi(x_1 \mid x_3) \, \pi(x_2 \mid x_3) \, \pi(x_3)
$$
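In a Gaussian vector, conditional independence can be read directly from the precision matrix: $x_1 \perp x_2 \mid x_3$ holds exactly when $Q_{12} = 0$. The sketch below uses an arbitrary positive definite precision matrix (an assumption for illustration), computes the conditional covariance of $(x_1, x_2)$ given $x_3$ from the covariance matrix, and shows that it is diagonal even though $x_1$ and $x_2$ are marginally correlated.

```r
# Precision matrix with Q[1, 2] = 0: x1 and x2 are conditionally independent given x3
Q <- matrix(c( 2,  0, -1,
               0,  2, -1,
              -1, -1,  3), nrow = 3, byrow = TRUE)
Sigma <- solve(Q)                       # covariance matrix: dense

# Marginally, x1 and x2 are correlated
Sigma[1, 2]

# Conditional covariance of (x1, x2) given x3 (Schur complement of Sigma)
a <- 1:2
b <- 3
cond_cov <- Sigma[a, a] - Sigma[a, b, drop = FALSE] %*% solve(Sigma[b, b]) %*% Sigma[b, a, drop = FALSE]
cond_cov                                # off-diagonal entry is zero

# Its inverse is the corresponding block of Q, hence the zero off-diagonal
solve(cond_cov)
```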

Now consider the more general case of an AR(1), exploiting the possibility of defining the functions $f(\cdot)$ in eq. (4.1). AR(1) is an autoregressive model of order 1 specified on the latent linear predictor $\boldsymbol{\eta}$ (the notation changes slightly, using $\boldsymbol{\eta}$ instead of $\boldsymbol{\theta}$, since there are few latent components), with constant variance and standard normal errors (2005; 2018). The model can be expressed as:

$$
\eta_t = \rho \, \eta_{t-1} + \epsilon_t, \qquad \epsilon_t \overset{iid}{\sim} N(0, 1), \qquad |\rho| < 1
$$

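where the subscript $t$ is the time index and $\rho$ is the correlation in time. The conditional form of the previous equation, for $t = 2, \dots, n$, is:

$$
\eta_1 \sim N\left(0, \frac{1}{1 - \rho^2}\right), \qquad \eta_t \mid \eta_1, \dots, \eta_{t-1} \sim N(\rho \, \eta_{t-1}, 1)
$$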
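The conditional form lends itself to sequential simulation. The sketch below (the values of $\rho$ and of the series length are arbitrary assumptions) draws $\eta_1$ from its stationary distribution and then each $\eta_t$ given $\eta_{t-1}$; the sample variance and lag-1 autocorrelation of a long path should be close to $1/(1 - \rho^2)$ and $\rho$, the stationary variance and autocorrelation of an AR(1) with unit-variance innovations.

```r
# Simulate an AR(1) latent predictor from its conditional form
simulate_ar1 <- function(n, rho) {
  stopifnot(abs(rho) < 1)
  eta <- numeric(n)
  eta[1] <- rnorm(1, 0, sqrt(1 / (1 - rho^2)))   # stationary start
  for (t in 2:n) {
    eta[t] <- rnorm(1, rho * eta[t - 1], 1)       # eta_t | eta_{t-1} ~ N(rho * eta_{t-1}, 1)
  }
  eta
}

set.seed(123)
rho <- 0.7
eta <- simulate_ar1(1e5, rho)
c(sample_var = var(eta), theoretical = 1 / (1 - rho^2))

# Lag-1 sample autocorrelation, close to rho
acf(eta, lag.max = 1, plot = FALSE)$acf[2]
```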

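Consider also the marginal distribution of each $\eta_t$: it can be proven to be Gaussian with mean 0 and variance $1/(1 - \rho^2)$ (2018). Moreover, the covariance between any $\eta_i$ and $\eta_j$ is $\rho^{|i-j|}/(1 - \rho^2)$, which vanishes as the distance $|i - j|$ increases. Therefore $\boldsymbol{\eta}$ is a Gaussian process, whose proper definition is in ??, with a mean structure of 0s and covariance matrix $\boldsymbol{\Sigma}$, i.e. $\boldsymbol{\eta} \sim N(\boldsymbol{0}, \boldsymbol{\Sigma})$. $\boldsymbol{\Sigma}$ is a dense $n \times n$ matrix that complicates computations. However, a simple rearrangement shows that AR(1) is a special type of Gaussian process with a sparse precision matrix, which becomes evident by writing the joint distribution of $\boldsymbol{\eta}$:

$$
\pi(\boldsymbol{\eta}) = \pi(\eta_1) \prod_{t=2}^{n} \pi(\eta_t \mid \eta_{t-1}) \propto \exp\left(-\frac{1}{2} \boldsymbol{\eta}' \boldsymbol{Q} \boldsymbol{\eta}\right)
$$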

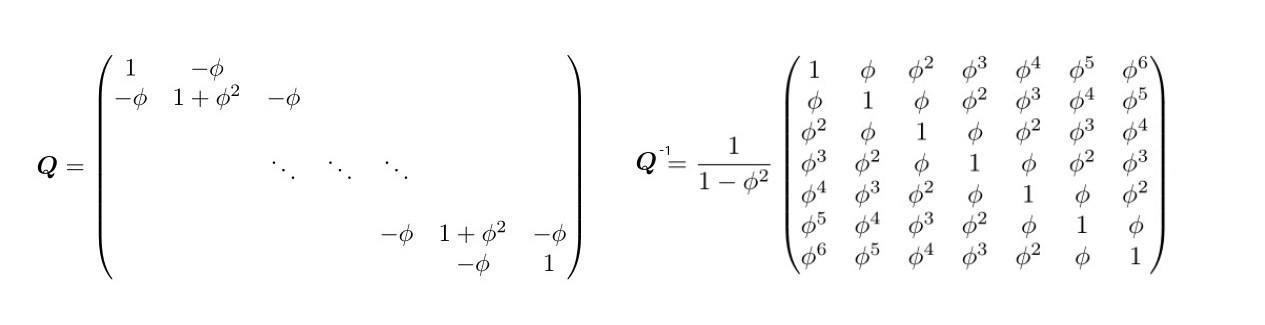

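whose precision matrix, compared to the corresponding covariance matrix, is:

$$
\boldsymbol{Q} = \begin{pmatrix}
1 & -\rho & & & \\
-\rho & 1 + \rho^2 & -\rho & & \\
 & \ddots & \ddots & \ddots & \\
 & & -\rho & 1 + \rho^2 & -\rho \\
 & & & -\rho & 1
\end{pmatrix}
$$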

Figure 4.1: Precision Matrix in GMRF vs the Covariance matrix, source Havard Rue and Held (2005)

with zero entries outside the diagonal and the first off-diagonals (right panel of fig. 4.1) (2005). Conditional independence makes the precision matrix tridiagonal, since generic $\eta_i$ and $\eta_j$ are conditionally independent for $|i - j| > 1$, given all the rest. In other words, $\boldsymbol{Q}$ is sparse because, given all the other latent predictors $\boldsymbol{\eta}_{-t}$, $\eta_t$ depends only on its neighbours $\eta_{t-1}$ and $\eta_{t+1}$. For example in (4.9), assume $n = 3$, $i = 1$ and $j = 3$; then:

$$
\pi(\eta_1, \eta_3 \mid \eta_2) = \pi(\eta_1 \mid \eta_2) \, \pi(\eta_3 \mid \eta_2)
$$

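so the conditional density of $\eta_3$ depends only on its preceding term, i.e. $\eta_2$. The same reasoning applies to $\eta_1$, which depends only on $\eta_2$, and vice versa. Ultimately this leads to a more formal definition of a GMRF (Havard Rue and Held 2005): a random vector $\boldsymbol{x} = (x_1, \dots, x_n)'$ is a GMRF with respect to a labelled graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, with mean $\boldsymbol{\mu}$ and positive definite precision matrix $\boldsymbol{Q}$, if and only if its density has the form

$$
\pi(\boldsymbol{x}) = (2\pi)^{-n/2} \, |\boldsymbol{Q}|^{1/2} \exp\left(-\frac{1}{2} (\boldsymbol{x} - \boldsymbol{\mu})' \boldsymbol{Q} (\boldsymbol{x} - \boldsymbol{\mu})\right)
$$

and $Q_{ij} \neq 0 \iff \{i, j\} \in \mathcal{E}$ for all $i \neq j$.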
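The AR(1) example and the GMRF definition can be verified numerically. The sketch below, in base R and with arbitrary values of $n$ and $\rho$, builds the dense covariance $\Sigma_{ij} = \rho^{|i-j|}/(1 - \rho^2)$ and the tridiagonal precision $\boldsymbol{Q}$, checks that $\boldsymbol{\Sigma}^{-1} = \boldsymbol{Q}$, evaluates the GMRF log-density through the Cholesky factor of $\boldsymbol{Q}$, and computes a full conditional mean that only involves the neighbours. Base R's chol() does not exploit sparsity; for large $n$ a sparse Cholesky factorisation (e.g. from the Matrix package) would be used instead.

```r
n <- 6
rho <- 0.6

# Dense covariance of a stationary AR(1) with unit-variance innovations
Sigma <- rho^abs(outer(1:n, 1:n, "-")) / (1 - rho^2)

# Tridiagonal precision: 1 at the two corners, 1 + rho^2 elsewhere on the diagonal,
# -rho on the first off-diagonals
Q <- diag(c(1, rep(1 + rho^2, n - 2), 1))
Q[cbind(1:(n - 1), 2:n)] <- -rho
Q[cbind(2:n, 1:(n - 1))] <- -rho

max(abs(solve(Sigma) - Q))                                   # numerically zero
c(nonzero_Sigma = sum(abs(Sigma) > 1e-12), nonzero_Q = sum(abs(Q) > 1e-12))

# GMRF log-density computed from the Cholesky factor of Q (Q = R'R)
gmrf_logdens <- function(x, Q, mu = rep(0, length(x))) {
  R <- chol(Q)
  z <- R %*% (x - mu)                   # (x - mu)' Q (x - mu) = ||R (x - mu)||^2
  -0.5 * length(x) * log(2 * pi) + sum(log(diag(R))) - 0.5 * sum(z^2)
}

set.seed(7)
x <- rnorm(n)
gmrf_logdens(x, Q)

# Full conditional mean of x_i0 given the rest uses only the non-zero entries of row i0 of Q:
# E(x_i0 | x_-i0) = -sum_{j != i0} Q[i0, j] * x_j / Q[i0, i0]
i0 <- 3
cond_mean <- -sum(Q[i0, -i0] * x[-i0]) / Q[i0, i0]
cond_mean
```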

4.3 INLA Laplace Approximations

The goal of Bayesian inference is the marginal posterior distribution of each element of the latent field. INLA does not try to approximate the whole joint posterior distribution of expression (4.6), i.e. $\pi(\boldsymbol{\theta}, \boldsymbol{\psi} \mid \boldsymbol{y})$: if it did (two-dimensional approximation), it would produce highly biased approximations, since it would fail to capture both the location and the skewness of the marginals. Instead, the INLA algorithm estimates the posterior marginal distribution $\pi(\theta_i \mid \boldsymbol{y})$ of each $\theta_i$ in the latent field $\boldsymbol{\theta}$, and $\pi(\psi_k \mid \boldsymbol{y})$ of each hyper-parameter $\psi_k$ (returning to the latent field notation).

The mathematical intuition behind the Laplace approximation, along with some real-life cases, is given in appendix 8.2.

The key idea of INLA is therefore to apply the Laplace approximation only to densities that are near-Gaussian (2018), replacing deeply nested dependencies with the more convenient conditional distributions, which are ultimately "more Gaussian" than their joint distribution. Within the LGM framework, assume to observe $n$ counts $y_i$ drawn from a Poisson distribution with mean $\lambda_i$. The link function is then the logarithm, which relates $\lambda_i$ to the linear predictor and hence to the latent field $\boldsymbol{\theta}$, i.e. $\log(\lambda_i) = \eta_i = \theta_i$. The hyper-parameters $\boldsymbol{\psi}$ determine the covariance matrix structure of the latent field.
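The idea of replacing a near-Gaussian density with a Gaussian centred at its mode can be seen on the smallest instance of this Poisson model: one count $y$ with $\log \lambda = \theta$ and a Gaussian prior $\theta \sim N(0, s^2)$. The sketch below (the values of $y$ and $s$ are assumptions) finds the posterior mode with Newton's method, uses the curvature at the mode as precision, and compares the result with the exact posterior normalised by numerical integration. This is the plain Laplace approximation for a single parameter, not INLA's full nested scheme.

```r
# One Poisson count with log link and Gaussian prior theta ~ N(0, s^2) (values are assumptions)
y <- 4
s <- 1.5

log_post <- function(theta) y * theta - exp(theta) - theta^2 / (2 * s^2)   # unnormalised
grad     <- function(theta) y - exp(theta) - theta / s^2
hess     <- function(theta) -exp(theta) - 1 / s^2

# Newton iterations for the posterior mode
theta_hat <- 0
for (it in 1:50) {
  step <- grad(theta_hat) / hess(theta_hat)
  theta_hat <- theta_hat - step
  if (abs(step) < 1e-10) break
}
sd_laplace <- sqrt(-1 / hess(theta_hat))   # Gaussian approximation N(theta_hat, sd_laplace^2)

# Exact normalising constant and mean by numerical integration
Z <- integrate(function(th) exp(log_post(th)), -10, 10)$value
exact_mean <- integrate(function(th) th * exp(log_post(th)) / Z, -10, 10)$value

# Laplace approximation of the normalising constant
Z_laplace <- exp(log_post(theta_hat)) * sqrt(2 * pi) * sd_laplace

c(mode = theta_hat, laplace_sd = sd_laplace, exact_mean = exact_mean, Z_ratio = Z_laplace / Z)
```

The normalising constant is recovered within a few percent, while the gap between the exact mean and the mode reflects the skewness that a single Gaussian cannot capture.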

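Assume also, once again, that the latent field is modelled with an AR(1), with hyper-parameters $\boldsymbol{\psi} = (\rho, \sigma^2)$, i.e. the correlation in time and the variance of the innovations. Fitting the model into the LGM framework first requires specifying an exponential family distribution, i.e. the Poisson, for the response $\boldsymbol{y}$. The higher level (recall the last part of sec. 4.1) then results in:

$$
\pi(\boldsymbol{y} \mid \boldsymbol{\theta}) = \prod_{i=1}^{n} \frac{\exp\left(\theta_i y_i - e^{\theta_i}\right)}{y_i!}
$$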

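The medium level is, for the latent Gaussian random field, a multivariate Gaussian distribution:

$$
\boldsymbol{\theta} \mid \boldsymbol{\psi} \sim N\left(\boldsymbol{0}, \boldsymbol{Q}^{-1}(\boldsymbol{\psi})\right)
$$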

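and the lower level specifies a joint prior distribution $\pi(\boldsymbol{\psi})$ for $\boldsymbol{\psi}$. Following eq. (4.5), then:

$$
\pi(\boldsymbol{\theta}, \boldsymbol{\psi} \mid \boldsymbol{y}) \propto \pi(\boldsymbol{\psi}) \, \pi(\boldsymbol{\theta} \mid \boldsymbol{\psi}) \prod_{i=1}^{n} \pi(y_i \mid \theta_i)
$$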

Recall the goal of Bayesian inference, i.e. approximating the posterior marginals $\pi(\theta_i \mid \boldsymbol{y})$ and $\pi(\psi_k \mid \boldsymbol{y})$. The first difficulty is that the Laplace approximation for this model involves the product of a Gaussian distribution and a non-Gaussian one. As the key idea of INLA suggests, the algorithm starts by rearranging the problem so that the "most Gaussian" quantities are computed first. The method can be subdivided into three tasks. First, INLA approximates $\pi(\boldsymbol{\psi} \mid \boldsymbol{y})$, the joint posterior of the hyper-parameters. Then it approximates $\pi(\theta_i \mid \boldsymbol{\psi}, \boldsymbol{y})$, the conditional marginal distribution of each $\theta_i$. Finally, it explores $\boldsymbol{\psi}$ with numerical integration methods. The corresponding integrals to be approximated are:

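- for task 1: $\pi(\psi_k \mid \boldsymbol{y}) = \int \pi(\boldsymbol{\psi} \mid \boldsymbol{y}) \, d\boldsymbol{\psi}_{-k}$

- for task 2: $\pi(\theta_i \mid \boldsymbol{y}) = \int \pi(\theta_i \mid \boldsymbol{\psi}, \boldsymbol{y}) \, \pi(\boldsymbol{\psi} \mid \boldsymbol{y}) \, d\boldsymbol{\psi}$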

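As a result, the approximations of the marginal posteriors are, first:

$$
\tilde{\pi}(\psi_k \mid \boldsymbol{y}) = \int \tilde{\pi}(\boldsymbol{\psi} \mid \boldsymbol{y}) \, d\boldsymbol{\psi}_{-k}
$$

and then,

$$
\tilde{\pi}(\theta_i \mid \boldsymbol{y}) \approx \sum_{j} \tilde{\pi}(\theta_i \mid \boldsymbol{\psi}^{(j)}, \boldsymbol{y}) \, \tilde{\pi}(\boldsymbol{\psi}^{(j)} \mid \boldsymbol{y}) \, \Delta_j \tag{4.12}
$$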

where in (4.12) $\boldsymbol{\psi}^{(j)}$ are relevant integration points and $\Delta_j$ are the weights associated with the hyper-parameter values on a grid (Blangiardo and Cameletti 2015). In other words, the larger the weight $\Delta_j$, the more relevant the corresponding integration point. Details on how INLA finds these points are beyond the scope of this work; an in-depth treatment is offered by Wang, Yue, and Faraway (2018) in sec. 2.3.
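The two tasks and eq. (4.12) can be mimicked on a toy model in which every conditional is available in closed form: $y_i \mid \theta \sim N(\theta, 1)$, $\theta \mid \tau \sim N(0, 1/\tau)$ and $\tau \sim \text{Gamma}(a, b)$, with $\psi = \log \tau$. Because the likelihood is Gaussian, $\pi(\theta \mid \psi, \boldsymbol{y})$ is exactly Gaussian, so the approximation step is exact here and only the integration over $\psi$ is numerical. The sketch evaluates $\pi(\psi \mid \boldsymbol{y})$ on a regular grid (INLA itself uses more refined grid or CCD designs), turns it into normalised weights playing the role of $\tilde{\pi}(\boldsymbol{\psi}^{(j)} \mid \boldsymbol{y}) \Delta_j$, and mixes the conditional marginals as in eq. (4.12). Model, data, prior and grid are illustrative assumptions.

```r
# Toy model where every conditional is Gaussian (all settings are assumptions):
# y_i | theta ~ N(theta, 1), theta | tau ~ N(0, 1 / tau), tau ~ Gamma(a, b), psi = log(tau)
set.seed(2024)
y <- rnorm(20, mean = 0.8, sd = 1)
n <- length(y)
a <- 1
b <- 0.1

# theta | psi, y is exactly Gaussian here, so the approximation step is exact
cond_mean <- function(psi) sum(y) / (exp(psi) + n)
cond_sd   <- function(psi) 1 / sqrt(exp(psi) + n)

# Task 1: log pi(psi | y) up to a constant, using
# pi(psi | y) proportional to pi(y | theta) pi(theta | psi) pi(psi) / pi(theta | psi, y),
# evaluated at theta = cond_mean(psi)
log_post_psi <- function(psi) {
  th <- cond_mean(psi)
  log_lik    <- sum(dnorm(y, th, 1, log = TRUE))
  log_latent <- dnorm(th, 0, 1 / sqrt(exp(psi)), log = TRUE)
  # prior density of psi = log(tau): Gamma density of tau times the Jacobian exp(psi)
  log_prior  <- dgamma(exp(psi), shape = a, rate = b, log = TRUE) + psi
  log_cond   <- dnorm(th, th, cond_sd(psi), log = TRUE)
  log_lik + log_latent + log_prior - log_cond
}

# Regular grid on psi and normalised weights
psi_grid <- seq(-6, 6, by = 0.05)
lp <- vapply(psi_grid, log_post_psi, numeric(1))
w <- exp(lp - max(lp))
w <- w / sum(w)

# Task 2 / eq. (4.12): mix the conditional marginals pi(theta | psi_j, y) with the weights
theta_grid <- seq(-1, 3, length.out = 401)
marg_theta <- rowSums(sapply(seq_along(psi_grid), function(j) {
  w[j] * dnorm(theta_grid, cond_mean(psi_grid[j]), cond_sd(psi_grid[j]))
}))
```

As a check, integrating $\tau$ out analytically gives a Student-t prior on $\theta$, so the exact marginal posterior is available for this toy model and can be compared with the mixture.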

4.4 R-INLA package

The INLA library and algorithm are developed by the R-INLA project, whose package is available on the project website and in its source repository. The INLA website (recently restyled) also hosts a dedicated forum, where discussion groups are open and an active community is keen to answer questions, as well as a number of reference books, some of which are fully open access. INLA is available for all major operating systems; it is built on top of other libraries and is still not on CRAN.

The core function of the package is inla(), which works like many other regression functions such as glm(), lm() or gam(). inla() takes as argument the model formula, i.e. the linear predictor, in which a number of linear and non-linear effects of covariates can be specified as in eq. (4.1); the whole set of available effects is listed by names(inla.models()$latent). It also requires the dataset and its likelihood family, whose available options are listed by names(inla.models()$likelihood).

Further options can be passed to the function through lists, such as control.family and control.fixed, which let the analyst specify the prior distributions of parameters and hyper-parameters and control the hyper-parameters. They take the form of nested lists when there are more than two parameters and hyper-parameters; when nothing is specified, the default priors are vague (non-informative).
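As an illustration of these control lists, the sketch below refits the SPDEtoy model used later in this section while spelling out priors that, to the best of our knowledge, coincide with R-INLA's defaults for a Gaussian model: a flat prior on the intercept (prec.intercept = 0), N(0, 1/0.001) priors on the other fixed effects, and a log-gamma(1, 5e-05) prior on the log of the likelihood precision. These values should be checked against inla.doc("gaussian") and the control.fixed documentation of the installed version. The wrapper name fit_spdetoy is hypothetical, and the call is guarded by requireNamespace() because R-INLA is not on CRAN.

```r
# Hypothetical wrapper: refit the SPDEtoy model with the priors written out explicitly
fit_spdetoy <- function() {
  library(INLA)
  data("SPDEtoy", package = "INLA", envir = environment())
  inla(y ~ s1 + s2,
       data = SPDEtoy,
       family = "gaussian",
       # fixed effects: flat prior on the intercept (precision 0), N(0, 1/0.001) on the slopes
       control.fixed = list(mean.intercept = 0, prec.intercept = 0,
                            mean = 0, prec = 0.001),
       # likelihood precision tau: log-gamma prior on log(tau), i.e. tau ~ Gamma(1, 5e-05)
       control.family = list(hyper = list(prec = list(prior = "loggamma",
                                                       param = c(1, 5e-05)))))
}

if (requireNamespace("INLA", quietly = TRUE)) {
  m_explicit <- fit_spdetoy()
  print(m_explicit$summary.fixed[, c("mean", "sd")])
  # available latent models and likelihood families
  print(head(names(INLA::inla.models()$latent)))
  print(head(names(INLA::inla.models()$likelihood)))
}
```

If the stated defaults are correct, the fixed-effect summaries should match those of a call that leaves the control lists unspecified.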

The object returned by inla() is a list (of class inla) containing the results of model fitting, whose components are summarised in figure 4.3.

Figure 4.3: outputs of an inla() call, source: E. T. Krainski (2019)

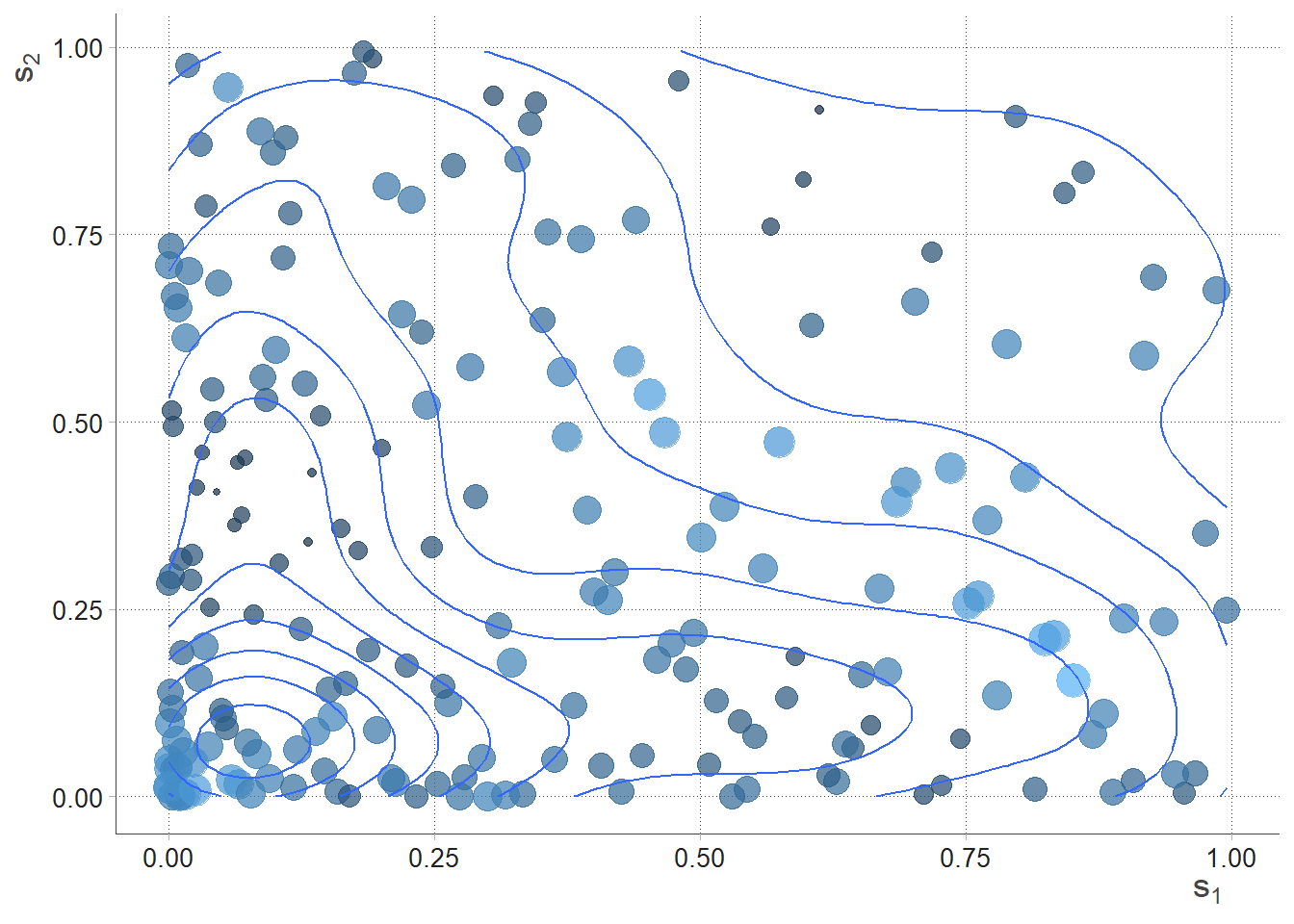

The SPDEtoy dataset, shipped with R-INLA, contains a simulated response y observed at point locations whose two coordinates, s1 and s2, are themselves randomly generated.

Figure 4.4: SPDEtoy bubble plot, author’s source

Imposing an LGM first requires selecting, at the higher level of the hierarchy, a likelihood model for $y$, i.e. Gaussian (the default), and a model formula (eq. (4.1)), i.e. $\eta_i = \beta_0 + \beta_1 s_{1i} + \beta_2 s_{2i}$, whose link function is the identity. There are no non-linear effects of covariates in $\eta_i$, but they can easily be added with the f() function; this also allows random effects, e.g. spatial effects, to be included in the model. Second, at the medium level, an LGRF is placed on the latent parameters $\boldsymbol{\theta} = \{\beta_0, \beta_1, \beta_2\}$. At the lower level are the prior distributions, which by default are a flat (improper uniform) prior for the intercept and vague Gaussian priors for the coefficients, i.e. centred in 0 with a very low precision (0.001) and hence a very large standard deviation. Furthermore, the precision hyper-parameter $\tau$ of the Gaussian likelihood, which accounts for the measurement error variance, is by default Gamma distributed with shape 1 and rate 0.00005. Since models are sensitive to prior choices (sec. 5.5), priors are revised later if necessary.

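A summary of the model specification is given below:

$$
\begin{aligned}
y_i \mid \boldsymbol{\theta}, \tau &\sim N(\eta_i, \tau^{-1}), \qquad \eta_i = \beta_0 + \beta_1 s_{1i} + \beta_2 s_{2i} \\
\beta_0 &\sim \text{Uniform (flat)}, \qquad \beta_1, \beta_2 \sim N(0, 0.001^{-1}) \\
\tau &\sim \text{Gamma}(1, 0.00005)
\end{aligned}
$$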

The model is then fitted with an inla() call, specifying the formula, the data and the exponential family distribution.

library(INLA)

data(SPDEtoy)

formula <- y ~ s1 + s2

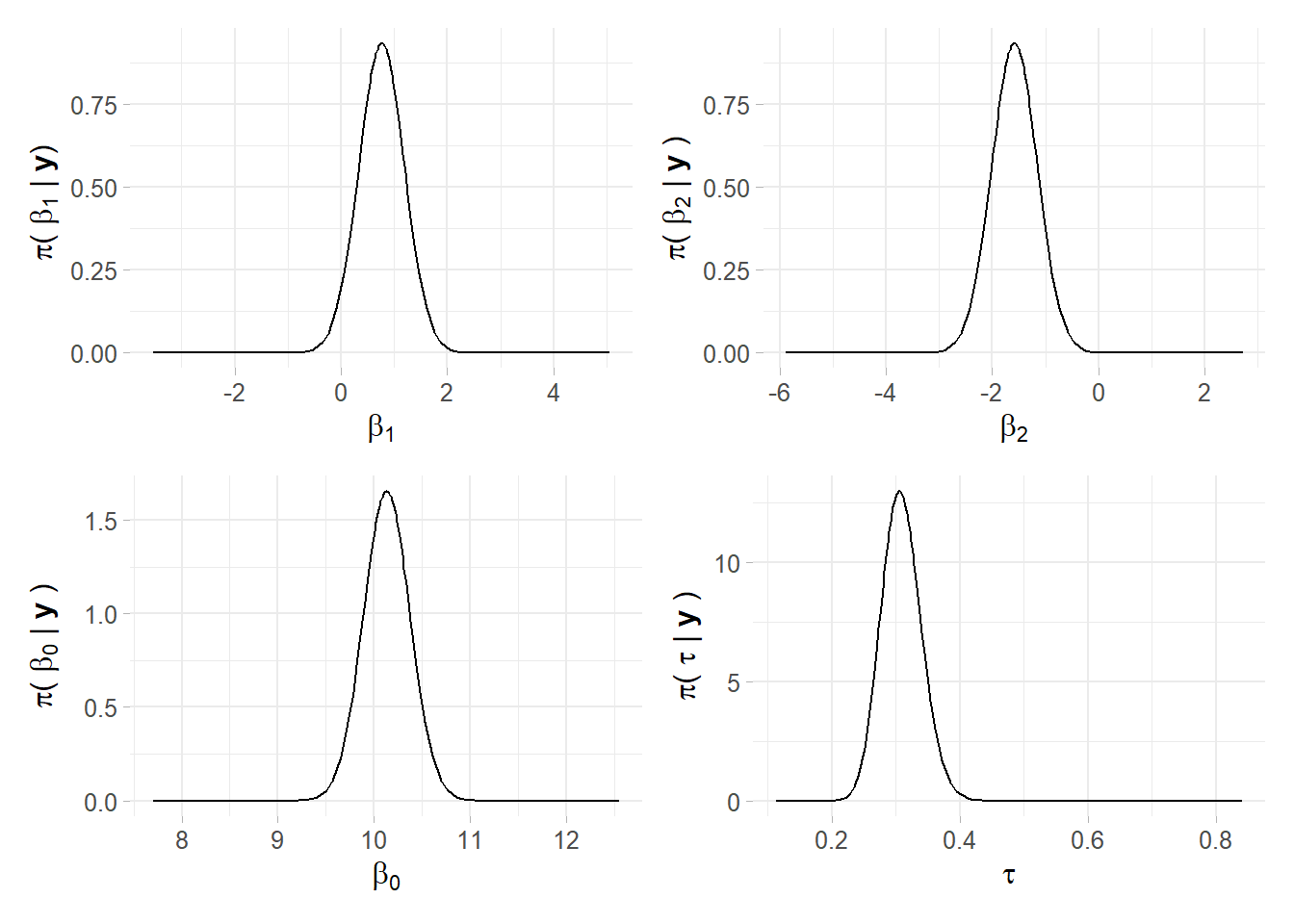

m0 <- inla(formula, data = SPDEtoy, family = "gaussian")

Table 4.1 offers a summary of the posterior marginal values for the intercept and the covariates' coefficients. Marginal distributions of both parameters and hyper-parameters can be conveniently plotted as in figure 4.6. The table also shows that the posterior mean of $\beta_2$ is negative: the further north the y-coordinate $s_2$, the lower the response. This is consistent with the SPDEtoy bubble plot in figure 4.4, where bigger bubbles are concentrated around the origin.

| coefficients | mean | sd |
|---|---|---|
| (Intercept) | 10.1321487 | 0.2422118 |
| s1 | 0.7624296 | 0.4293757 |
| s2 | -1.5836768 | 0.4293757 |

Figure 4.6: Linear predictor marginals, plot recoded in ggplot2, author’s source

Finally, R-INLA also provides base-R-style functions for the density, distribution and quantile functions of marginal posterior distributions, respectively inla.dmarginal, inla.pmarginal and inla.qmarginal. Another function, inla.hpdmarginal, computes the highest posterior density (HPD) credible interval for a given coefficient, e.g. $\beta_2$, i.e. the shortest interval $[a, b]$ such that $P(a \le \beta_2 \le b \mid \boldsymbol{y}) = 0.9$ (90% credibility), whose result is shown in the table below.

|  | low | high |
|---|---|---|
| level:0.9 | -2.291268 | -0.879445 |
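The marginal utilities can be applied to the model m0 fitted above, assuming that the marginal of $\beta_2$ is stored under the name s2 in m0$marginals.fixed (R-INLA names fixed-effect marginals after the covariates). To show what an HPD interval computes, the second part defines a hypothetical base-R helper, hpd_from_grid, working on a density evaluated on a fine regular grid and stored as a two-column (x, density) matrix, the layout R-INLA uses for marginals. On a Gaussian with the posterior mean and standard deviation of $\beta_2$ from Table 4.1 it returns roughly (-2.29, -0.88), close to the R-INLA result, which is based on the actual, not exactly Gaussian, marginal.

```r
# R-INLA utilities on the marginal of beta_2 (only if INLA and the fitted m0 are available)
if (requireNamespace("INLA", quietly = TRUE) && exists("m0")) {
  marg_s2 <- m0$marginals.fixed$s2
  print(INLA::inla.hpdmarginal(0.9, marg_s2))           # 90% HPD interval
  print(INLA::inla.qmarginal(c(0.05, 0.95), marg_s2))   # 90% equal-tailed interval
  print(INLA::inla.pmarginal(0, marg_s2))               # P(beta_2 < 0 | y)
}

# Hypothetical helper: HPD interval from a density evaluated on a fine regular grid
hpd_from_grid <- function(marginal, level = 0.9) {
  x <- marginal[, 1]
  d <- marginal[, 2]
  p <- d / sum(d)                              # probability mass per grid point
  ord <- order(d, decreasing = TRUE)           # highest-density points first
  keep <- ord[seq_len(which(cumsum(p[ord]) >= level)[1])]
  c(low = min(x[keep]), high = max(x[keep]))   # contiguous for a unimodal density
}

x <- seq(-4, 1, length.out = 20001)
marg_gauss <- cbind(x = x, y = dnorm(x, mean = -1.5836768, sd = 0.4293757))
hpd_from_grid(marg_gauss, 0.9)
```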

Note that the interpretation differs from the traditional frequentist one (2018): in Bayesian statistics $\beta_2$ is a random variable with its own probability distribution, so the interval above contains $\beta_2$ with posterior probability 0.9, while frequentists consider $\beta_2$ a fixed unknown quantity whose value is inferred through an estimator, i.e. a random variable depending on the data (2015).